By Sebastian Pina, OSCP, OASP, PNPT, PWPA, Security+

Senior Consultant, Technical Testing Services

Introduction

Healthcare organizations invest heavily in advanced cybersecurity technologies — firewalls, endpoint protection, and intrusion detection systems. Yet one of the biggest threats remains the human factor. Employees who are untrained, overworked, or pressured can be manipulated into making mistakes. Social engineering, especially over the phone (known as “vishing”), remains a powerful weapon for malicious actors. Protecting against this risk requires both smarter verification processes and ongoing employee training.

How Attackers Manipulate Employees

Social engineering attacks often exploit urgency. Attackers posing as IT staff, executives, or vendors create high-pressure scenarios, pushing employees to bypass normal verification procedures. Once the attacker has convinced the target of their legitimacy, they can extract sensitive data or credentials.

Real-World Example

In 2023, a regional health system in the Midwest reported a vishing incident in which attackers impersonated IT support. A receptionist, pressured to resolve an “urgent email outage,” provided credentials that allowed access to the electronic health record (EHR) system. The breach exposed thousands of patient records and led to a costly investigation by the HHS Office for Civil Rights (OCR).

Impact

If attackers succeed in impersonating C-level leaders, IT administrators, or clinicians, they can obtain the elevated privileges tied to those roles. That access could allow them to lock users out, alter patient data, or disrupt care delivery. The reputational and regulatory consequences can be severe.

Preventative Measures

- Quarterly training on social engineering and vishing techniques

- Adhering to least-privilege access models

- Monitoring for unusual login activity across accounts
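Login monitoring is usually handled by an identity provider or SIEM, but the underlying idea fits in a few lines. The Python sketch below is illustrative only: the `LoginEvent` fields, the `flag_unusual_logins` function, and the business-hours window are hypothetical assumptions, not any product's rule set. It flags a login when it comes from a country not previously seen for that user, or when it falls outside normal working hours.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class LoginEvent:
    # Hypothetical, simplified log record; real identity-provider logs differ.
    user: str
    time: datetime
    country: str


def flag_unusual_logins(events, start_hour=7, end_hour=19):
    """Return (event, reasons) pairs for logins that look unusual.

    Assumed heuristics for illustration only:
      - a login from a country not previously seen for that user
      - a login outside the business-hours window [start_hour, end_hour)
    """
    seen_countries = defaultdict(set)
    flagged = []
    for event in sorted(events, key=lambda e: e.time):
        reasons = []
        known = seen_countries[event.user]
        # The very first login establishes a baseline and is not treated as "new".
        if known and event.country not in known:
            reasons.append("new country")
        if not (start_hour <= event.time.hour < end_hour):
            reasons.append("off-hours")
        known.add(event.country)
        if reasons:
            flagged.append((event, reasons))
    return flagged


if __name__ == "__main__":
    events = [
        LoginEvent("jdoe", datetime(2025, 3, 3, 9, 15), "US"),
        LoginEvent("jdoe", datetime(2025, 3, 3, 10, 2), "US"),
        LoginEvent("jdoe", datetime(2025, 3, 4, 2, 47), "RO"),
    ]
    for event, reasons in flag_unusual_logins(events):
        print(event.user, event.time.isoformat(), reasons)
    # prints: jdoe 2025-03-04T02:47:00 ['new country', 'off-hours']
```

In this sample, only the third login is flagged, for both reasons. A real deployment would tune these heuristics to the organization's schedules (hospitals run around the clock, so off-hours activity alone is a weak signal for clinical staff) and combine them with other signals.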

Weak Verification Policies

The Problem

Traditional verification methods rely on easily discoverable personal information, such as birth dates, addresses, or phone numbers. With so much of this data available online, these questions no longer provide real protection.

Real-World Example

In 2024, a behavioral health provider’s helpdesk was tricked when attackers used publicly available information from LinkedIn and people-search websites to answer verification questions. This gave attackers access to the provider’s internal email system, where they exfiltrated patient appointment data.

Impact

Weak verification lets attackers reset passwords or modify accounts. Once inside, they can access email, scheduling systems, and patient records. Even relatively small breaches can trigger HIPAA penalties and lawsuits.

Preventative Measures

- Replace generic verification questions with organization-specific challenge questions

- Review and update verification policies annually

- Require secondary verification for sensitive requests (e.g., sending a code to a known device)
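The secondary-verification step can be sketched as an out-of-band one-time code. In the minimal Python example below, which illustrates the idea under stated assumptions rather than serving as a reference implementation, `OutOfBandVerifier`, the `directory` of contacts on file, and the `send` callable are all hypothetical stand-ins for an organization's own identity and messaging systems. The key design choices are that the code goes only to a device already on record (never to a number the caller provides), expires after a short window, and can be tried only once.

```python
import hmac
import secrets
import time

CODE_TTL_SECONDS = 300  # Assumption: codes expire after five minutes.


class OutOfBandVerifier:
    """Helpdesk identity check using a one-time code sent to a device on file.

    `directory` maps user IDs to contact details already on record, and `send`
    is a callable that delivers the code (e.g., via SMS or an authenticator app).
    Both are hypothetical stand-ins for an organization's own systems.
    """

    def __init__(self, directory, send):
        self.directory = directory
        self.send = send
        self._pending = {}  # user_id -> (code, expires_at)

    def start(self, user_id):
        # Look up the device on record; never use a number the caller provides.
        device = self.directory[user_id]
        code = f"{secrets.randbelow(10**6):06d}"  # random 6-digit code
        self._pending[user_id] = (code, time.monotonic() + CODE_TTL_SECONDS)
        self.send(device, code)

    def verify(self, user_id, spoken_code):
        # pop() makes each code single-use: it is discarded after any attempt.
        entry = self._pending.pop(user_id, None)
        if entry is None:
            return False
        code, expires_at = entry
        if time.monotonic() > expires_at:
            return False
        # Constant-time comparison, a standard precaution for secret values.
        return hmac.compare_digest(spoken_code.encode(), code.encode())


if __name__ == "__main__":
    outbox = {}
    verifier = OutOfBandVerifier(
        directory={"jdoe": "+1-555-0100"},
        send=lambda device, code: outbox.__setitem__(device, code),
    )
    verifier.start("jdoe")
    code_from_phone = outbox["+1-555-0100"]  # what the real user reads back
    print(verifier.verify("jdoe", code_from_phone))  # True
    print(verifier.verify("jdoe", code_from_phone))  # False: already used
```

Because each code is discarded after a single attempt, a caller who guesses wrong has to trigger a new code, which is delivered to the legitimate user's device and may alert that user that someone is requesting changes to their account.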

AI-Enhanced Voice Phishing

The New Threat

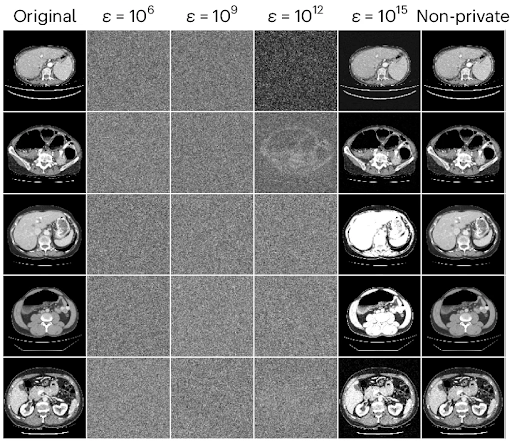

AI-powered voice cloning tools now allow attackers to replicate the voices of executives and physicians with alarming accuracy. A 20–30 second audio sample from a podcast, panel discussion, or YouTube video is often enough to generate convincing fakes.

Real-World Example

In 2025, a large hospital system in California reported an attempted attack in which a voice clone of the CFO requested a wire transfer from the finance department. The request was flagged and stopped only because the staff member followed internal escalation procedures. The incident highlighted how AI-enabled impersonation can bypass traditional trust cues, such as recognizing a familiar voice.

Impact

If successful, attackers could combine voice cloning with urgency tactics to compromise multiple accounts, extract PHI, or authorize fraudulent financial transactions. The risk is especially high for leaders and clinicians whose voices are widely available in public forums.

Preventative Measures

- Limit public release of long-form recordings of executives and key staff

- Implement pre-agreed verbal passphrases for sensitive requests

- Train employees to be wary of unusual voice patterns and of requests that bypass standard processes

Conclusion

Phone scams in healthcare remain effective because they exploit the human element — urgency, trust, and weak verification. With AI-driven threats emerging, the stakes are even higher. By strengthening verification policies, investing in continuous employee training, and preparing for AI-enabled attacks, healthcare organizations can reduce their risk exposure and protect both patient trust and organizational reputation.

Phone scams and AI-driven social engineering are only getting more sophisticated. If your organization needs help strengthening verification policies, training employees, or preparing for new threats, contact Clearwater today. Our experts can help you protect patient trust and stay ahead of attackers.

The post The Human Factor: Why Phone Scams Are Still So Effective in Healthcare appeared first on Clearwater.