Compliance Considerations for Patient Care, Administration, and Financial Accountability

Written By Dr. Stacey Atkins, PhD, MSW, LMSW, CPC, CIGE

As Artificial Intelligence (AI) reshapes the healthcare landscape, compliance professionals must anticipate how it will change the way organizations manage corporate compliance. If your organization has implemented AI, your compliance program should now include cross-functional oversight committees to review and monitor AI deployments. Key considerations are outlined below.

Introduction

Artificial Intelligence (AI) is revolutionizing the healthcare industry by enhancing diagnostic precision, increasing administrative efficiency, and fostering innovative patient care models. However, this advancement also demands rigorous scrutiny through a compliance lens.

Regulatory frameworks must evolve to match the complexity of AI-powered systems and ensure that patient rights, data integrity, and financial accountability remain protected. Compliance professionals must anticipate how AI intersects with laws such as HIPAA, the False Claims Act, and the 21st Century Cures Act to maintain ethical standards and institutional trust.

AI in Patient Care

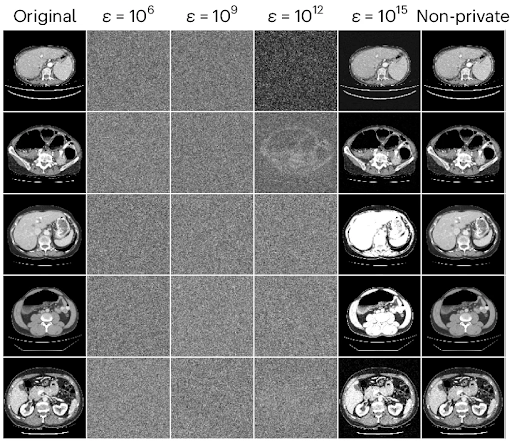

AI applications in clinical environments include risk prediction models, virtual health assistants, and real-time monitoring tools. Clinical decision support tools that analyze large datasets can help clinicians identify trends and recommend personalized interventions. However, risks such as bias in algorithmic training data or a lack of explainability in AI decisions threaten equitable care. These concerns underscore the importance of building transparency, fairness, and accountability into the AI development lifecycle.

Compliance teams must work with clinical leaders to determine whether AI tools fall under FDA oversight as medical devices and, where they do, to confirm that they meet applicable FDA requirements, including premarket submissions and post-market surveillance, to protect patient safety. As AI begins to play a larger role in recommending or even initiating treatment pathways, the responsibility for appropriate validation, risk mitigation, and documentation grows significantly. Policies must also address ethical considerations such as patient consent and clinical override procedures. Recommendations for Physicians and Nurses to consider include:

- Participating actively in training to understand AI tool functionalities and limitations;

- Remaining vigilant when evaluating AI-driven recommendations, using clinical judgment to identify potential biases or anomalies; and

- Reporting concerns or discrepancies promptly to compliance teams.

Administrative Use of AI

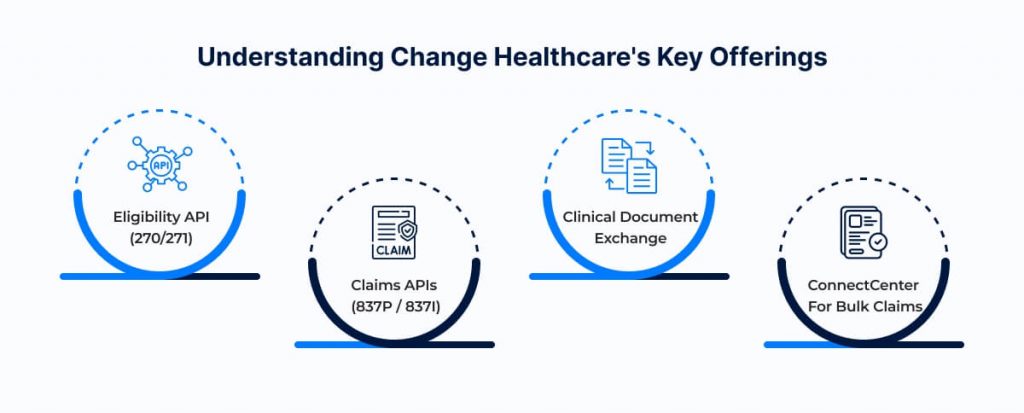

Administrative AI tools can significantly improve workflow efficiency by reducing paperwork, automating prior authorizations, and managing patient scheduling. For example, AI-driven chatbots help route patient inquiries, while robotic process automation (RPA) can streamline claims processing. Despite these benefits, improper configuration or inadequate oversight of administrative AI systems may introduce compliance risks, such as data breaches or noncompliant billing practices, with unintended consequences.

Compliance programs should include cross-functional oversight committees to review and monitor AI deployments. These teams should ensure the use of AI aligns with payer contract requirements and is auditable during external reviews or governmental investigations. Furthermore, organizations should ensure their administrative AI tools do not inadvertently violate payer rules or documentation standards. System logs, user feedback, and integration testing are essential to ensure that automation supports, rather than compromises, compliance. Recommendations for Medical Billing Coders to consider include:

- Regularly review AI-generated billing and coding to verify accuracy against clinical documentation;

- Report discrepancies or patterns of errors immediately to compliance teams; and

- Participate in ongoing training to stay updated on coding standards and AI tool developments.

Information Blocking and AI

ASTP (the Assistant Secretary for Technology Policy/Office of the National Coordinator for Health IT), previously known as “ONC,” is organizationally located within the Office of the Secretary of the U.S. Department of Health and Human Services (HHS). ASTP is the principal federal entity charged with coordinating nationwide efforts to implement and use the most advanced health information technology and the electronic exchange of health information.

When AI applications create or manage electronic health information (EHI), the providers, health IT developers, and health information networks or exchanges that use them must comply with ASTP’s information blocking rule. Relevant tools include those that produce clinical summaries, generate patient documentation, or assist in diagnosis. Providers may invoke one of the rule’s permissible exceptions, but they must be able to justify their decisions with evidence. For example, the “Preventing Harm” exception may apply if an AI-generated output is known or reasonably suspected to be erroneous and releasing it could mislead a patient in a way that puts their health or safety at risk.

Compliance officers must ensure that EHI-sharing policies are clearly documented; that patients have timely access to their data; and that systems are equipped to deliver requested data in accordance with federal requirements. Training and real-time decision support can help providers appropriately apply exceptions without violating the rule. Health systems must also monitor whether AI-generated data is accessible in usable formats and whether any AI-integrated tools restrict or delay data sharing in ways that could constitute noncompliance.

Recommendations for Allied Health Professionals to consider include:

- Ensuring familiarity with AI-driven documentation and patient interaction tools;

- Actively facilitating patient access to EHI generated by AI systems; and

- Reporting any barriers to data sharing or potential compliance concerns promptly.

Financial Accountability and Algorithmic Errors

AI tools involved in billing or coding introduce serious financial accountability considerations. A coding algorithm that misclassifies a procedure or service can lead to overpayments and potential allegations of fraud. In such cases, the provider may be held liable under the False Claims Act if it submitted the inaccurate claims with actual knowledge of the error or in deliberate ignorance or reckless disregard of it. The Office of Inspector General’s (OIG) compliance guidance urges healthcare organizations to implement auditing mechanisms tailored to detect errors, regardless of whether those errors originate with staff, conventional software, or AI tools.

Establishing protocols for reviewing AI-generated claims, comparing them to manual audits, and investigating anomalies is essential to achieve and maintain compliance. Compliance programs should include procedures for escalation, correction, and refund when errors are found. AI tools used for utilization review or for determining medical necessity must be subject to similar scrutiny. Additionally, organizations must foster a culture where staff feel empowered to report discrepancies without fear of reprisal, and where corrective action plans include AI system revalidation. Recommendations for All Healthcare Professionals to consider include:

- Conduct regular audits comparing AI outputs with manual reviews, then monitor periodically to ensure compliance standards continue to be met;

- Participate in cross-functional teams to review AI-driven financial processes; and

- Engage in training on financial accountability, focusing on identifying AI-related discrepancies.
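To illustrate the audit comparison recommended above, here is a minimal Python sketch. It assumes a compliance team has drawn a sample of claims and, for each one, has both the code the AI tool assigned and the code a human auditor assigned after reviewing the clinical documentation. The `AuditedClaim` record, the claim IDs, the sample codes, and the 5% escalation threshold are all hypothetical placeholders, not values drawn from OIG or CMS guidance; each organization sets its own sampling method and thresholds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditedClaim:
    """One sampled claim (hypothetical record): AI-assigned code vs. auditor-assigned code."""
    claim_id: str
    ai_code: str
    auditor_code: str


# Hypothetical escalation threshold; each organization sets its own.
ESCALATION_THRESHOLD = 0.05


def find_discrepancies(sample: list[AuditedClaim]) -> list[AuditedClaim]:
    """Return the claims where the AI-assigned code differs from the auditor's code."""
    return [claim for claim in sample if claim.ai_code != claim.auditor_code]


def discrepancy_rate(sample: list[AuditedClaim]) -> float:
    """Share of sampled claims with a mismatch; 0.0 for an empty sample."""
    if not sample:
        return 0.0
    return len(find_discrepancies(sample)) / len(sample)


def needs_escalation(sample: list[AuditedClaim], threshold: float = ESCALATION_THRESHOLD) -> bool:
    """Flag the sample for compliance escalation when the mismatch rate exceeds the threshold."""
    return discrepancy_rate(sample) > threshold


if __name__ == "__main__":
    # Illustrative sample only; codes and IDs are made up for this sketch.
    sample = [
        AuditedClaim("C-001", "99213", "99213"),
        AuditedClaim("C-002", "99214", "99213"),  # mismatch to investigate
        AuditedClaim("C-003", "99212", "99212"),
        AuditedClaim("C-004", "99213", "99213"),
    ]
    for claim in find_discrepancies(sample):
        print(f"Review {claim.claim_id}: AI={claim.ai_code}, auditor={claim.auditor_code}")
    print(f"Discrepancy rate: {discrepancy_rate(sample):.0%}")
    print("Escalate to compliance" if needs_escalation(sample) else "Within threshold")
```

Run as written, the sample flags claim C-002, reports a 25% discrepancy rate, and recommends escalation. A simple mismatch rate does not show whether errors lead to overpayment or underpayment, so flagged claims would still need individual review, correction, and, where appropriate, refund under the organization's own procedures.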

Conclusion

As AI continues to evolve, so must our compliance infrastructure to ensure that AI innovations align with ethical standards and legal obligations. With thoughtful integration, AI can support high-quality care, enhance administrative efficiency, and reduce costs. However, these benefits cannot come at the expense of accountability, transparency, or patient trust.

Organizations that invest in cross-disciplinary governance, risk management, and regulatory alignment will be best positioned to harness the promise of AI while safeguarding their mission and integrity. Compliance professionals must remain vigilant, agile, and collaborative to meet the demands of this fast-evolving frontier.

About the Author

Dr. Stacey R. Atkins, PhD, MSW, LMSW, CPC, CIGE

Dr. Atkins is a Compliance Specialist working as a team member in the Education Department of the American Institute of Healthcare Compliance. Her career spans leadership roles with the Office of the State Inspector General, the Department of Behavioral Health and Developmental Services, and HRSA, among others.

References

- U.S. Food and Drug Administration (FDA). (2021). Artificial Intelligence and Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan. https://www.fda.gov/media/145022/download

- Office of the National Coordinator for Health Information Technology (ONC). (2020). 21st Century Cures Act: Interoperability, Information Blocking, and the ONC Health IT Certification Program. https://www.healthit.gov/curesrule/

- U.S. Department of Health and Human Services, Office of Inspector General (OIG). (2021). Compliance Program Guidance. https://oig.hhs.gov/compliance/compliance-guidance/index.asp

- Office for Civil Rights (OCR), HHS. (2022). HIPAA and Health IT. https://www.hhs.gov/hipaa/for-professionals/special-topics/health-information-technology/index.html

- U.S. Department of Justice. (2023). False Claims Act Overview. https://www.justice.gov/civil/false-claims-act

- Centers for Medicare & Medicaid Services (CMS). (2023). Program Integrity Manual – Chapter 3: Verifying Potential Errors and Taking Corrective Actions. https://www.cms.gov/regulations-and-guidance/guidance/manuals/downloads/pim83c03.pdf

Copyright © 2025 American Institute of Healthcare Compliance All Rights Reserved

The post The Expanding Role of Artificial Intelligence in Healthcare appeared first on American Institute of Healthcare Compliance.