Hospitals, clinics, and medical offices in the United States are using AI to help with different tasks. These tasks include helping doctors make decisions and automating office work. One way AI is being used is to answer phones and help with front-office jobs. Companies like Simbo AI provide these services. But using AI in healthcare means you must keep patient data safe and follow privacy laws like the European Union’s General Data Protection Regulation (GDPR).

Even though GDPR is a law from the EU, it affects many U.S. medical offices. This is especially true if they work with patients from the EU or use AI tools based in other countries. Medical managers and IT staff in the U.S. need to know how to handle sensitive health data to follow GDPR rules. This article explains why data mapping and governance are important in keeping data private and following the law. It also shows how workflow automation supports safe AI use.

Why GDPR Matters for U.S. Healthcare Providers Using AI

GDPR took effect in May 2018. It has strict rules about handling personal information, including health data. It requires clear permission from patients, gives patients rights to see, fix, or delete their data, and requires that breaches be reported to the regulator within 72 hours where feasible. Breaking GDPR can lead to big fines: up to €20 million or 4% of a company’s worldwide annual turnover, whichever is higher. This is a risk even for U.S. offices that work with people in the EU or use AI tools involving EU data.
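As a rough illustration of the fine ceiling, the higher GDPR fine tier is capped at the greater of EUR 20 million or 4% of total worldwide annual turnover. The sketch below only computes that upper bound; the function name and the sample turnover figures are made up for the example, and actual fines are set case by case by regulators.

```python
def gdpr_fine_cap_eur(annual_turnover_eur: int) -> float:
    """Upper bound of the higher GDPR fine tier, in euros.

    The cap is the greater of a fixed EUR 20 million or 4% of
    worldwide annual turnover. This is a ceiling, not a prediction.
    """
    fixed_cap = 20_000_000.0
    turnover_cap = annual_turnover_eur * 4 / 100
    return max(fixed_cap, turnover_cap)


# Hypothetical turnover figures, chosen only to show both branches.
small_practice = gdpr_fine_cap_eur(100_000_000)     # fixed cap applies
large_network = gdpr_fine_cap_eur(2_000_000_000)    # 4% cap applies
```

For a smaller organization the fixed EUR 20 million figure is the binding ceiling, which is why the percentage alone understates the exposure.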

Healthcare AI systems use lots of personal data such as age, health records, and other identifiers. This makes data vulnerable to theft or misuse if not protected well. So, following GDPR rules is important not just in Europe but also in the U.S., especially when AI tools help with patient communication or scheduling.

The Role of Data Mapping in GDPR Compliance

Data mapping means finding out what data you have, where it is saved, who can see it, and how it is used. For healthcare using AI, good data mapping is key to being clear and responsible.

Healthcare leaders and IT staff should track:

- What patient data is collected: This includes things like age, medical history, phone calls, AI interaction info, and any personal details handled by AI.

- Where the data is stored: Is it on-site, in the cloud, or with outside AI providers? Knowing this helps control data movement and stop unauthorized sharing.

- Who can access the data: Knowing this lets you limit sensitive information to only those allowed to see it.

- How data is processed: Knowing each step makes sure processing follows GDPR rules, like using data only for needed reasons and not keeping too much.

Gagan Koneru, a cybersecurity expert, points out that data mapping helps meet GDPR demands. It can show where risks are and help with breach reports and consent checks. Since patients have rights to their data, data mapping lets healthcare organizations find and manage data quickly when patients make requests, which builds trust.
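The four questions above (what, where, who, how) can be captured in a simple inventory. The following is a minimal sketch, not a prescribed format: the class name, the categories, storage labels, roles, and retention periods are all hypothetical placeholders that a practice would replace with its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataMapEntry:
    category: str             # what patient data is collected
    storage_location: str     # where the data is stored
    allowed_roles: frozenset  # who can access the data
    purpose: str              # how and why the data is processed
    retention_days: int       # hypothetical retention period


# Hypothetical inventory for a practice using phone automation.
DATA_MAP = [
    DataMapEntry("call_recording", "cloud:vendor-telephony",
                 frozenset({"front_desk", "privacy_officer"}),
                 "appointment scheduling", 90),
    DataMapEntry("medical_history", "on_site:ehr",
                 frozenset({"clinician"}), "treatment", 3650),
    DataMapEntry("ai_interaction_log", "cloud:vendor-ai",
                 frozenset({"it_admin", "privacy_officer"}),
                 "service quality", 30),
]


def locations_for_request(data_map, categories):
    """Storage locations to search for an access or erasure request."""
    return sorted({e.storage_location for e in data_map
                   if e.category in categories})


def categories_visible_to(data_map, role):
    """Data categories a given role is allowed to see."""
    return [e.category for e in data_map if role in e.allowed_roles]
```

With an inventory like this, answering a patient request starts with a lookup such as `locations_for_request(DATA_MAP, {"call_recording", "ai_interaction_log"})` rather than a search across every system.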

Data Governance Frameworks Ensuring Security and Compliance

Data mapping tells you what you have, but data governance sets the rules for handling data safely. HIPAA is the main U.S. law protecting health information. But GDPR is broader in scope, covering all personal data rather than only protected health information, and it matters most for AI that uses data across countries.

Good data governance for AI includes:

- Data Quality Control: Data must be correct and reliable. Wrong data leads to bad AI results.

- Security Measures: Both HIPAA and GDPR call for safeguards such as strong encryption, tight access controls, and secure logins to prevent breaches.

- Audit Trails and Monitoring: Keeping detailed logs helps track who accessed or changed data and when. Regular checks find any weaknesses.

- Privacy by Design: Building data protection into AI from start to end keeps compliance over time.

- Employee Training: Teaching staff about GDPR and HIPAA lowers errors and supports privacy rules.

- Working with Privacy Experts: Consultants help follow changing laws and handle complex cases, especially those involving data sharing between countries.

Arun Dhanaraj, a data governance specialist, says that matching AI plans with governance rules helps spot and lower risks. Medical leaders should treat data governance as ongoing. New laws, like CCPA (California) and DPDP (India), are similar to GDPR. Following strong governance helps avoid legal trouble and keeps patient trust.
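The audit-trail item in the list above can be made tamper-evident by chaining log entries with hashes. This is one possible technique, not something the governance frameworks mandate; the function names and field names below are assumptions for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body):
    """SHA-256 over a canonical JSON encoding of the entry body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_event(log, actor, action, record_id, timestamp):
    """Append an access event that commits to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "action": action, "record_id": record_id,
            "timestamp": timestamp, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify_chain(log):
    """Return True only if no entry was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

Because each entry includes the hash of the one before it, editing an old entry breaks verification for the rest of the chain, which is what a regular check would look for.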

Managing Bias and Ethics in Healthcare AI Systems

Data governance also means making AI fair. Bias can happen if training data isn’t diverse or if AI models are poorly made. This can lead to wrong or unfair healthcare choices.

Matthew G. Hanna and others identify three kinds of bias:

- Data Bias: When AI training does not include diverse patient groups.

- Development Bias: Caused by choices in AI design and features picked.

- Interaction Bias: Comes from how AI is used and reported in clinics.

Healthcare providers in the U.S. should have ways to find and fix bias. This means using data from different groups, being open about AI design, and watching results all the time. Holding AI accountable helps ensure fair care and meets ethical expectations from the law and patients.

AI and Workflow Integration: Front-Office Automation and Compliance

AI is now common for jobs like answering phones and scheduling patients. Simbo AI provides phone automation that helps reduce work and improve communication.

But AI workflow automation must follow privacy and governance rules.

Medical offices should make sure that:

- Data from automated calls is kept safe: Recordings and personal info must be encrypted and follow HIPAA and GDPR.

- Patients give clear permission before data is collected.

- AI collects only needed data, nothing extra.

- Access to automated data is limited to authorized staff and AI parts.

- Systems are watched in real time to catch any privacy problems quickly.

- AI tools work with electronic health records to keep data correct and easy to manage.

Using AI automation reduces office work and can support better compliance by standardizing data handling. Simbo AI shows that AI needs to balance tech benefits with respect for laws and patient rights.
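Two of the checks above, consent before collection and collecting only needed data, can be enforced at the point where a call record is saved. The sketch below assumes a hypothetical allow-list of fields and a hypothetical record shape; it does not describe how Simbo AI or any specific product works.

```python
# Hypothetical allow-list: the only fields a scheduling call needs.
ALLOWED_FIELDS = {"caller_name", "callback_number", "requested_slot"}


def minimize_call_record(raw_record, consent_given):
    """Keep only allow-listed fields, and nothing at all without consent.

    Returns None when the patient has not given permission, so the
    caller cannot store the record by accident.
    """
    if not consent_given:
        return None
    return {key: value for key, value in raw_record.items()
            if key in ALLOWED_FIELDS}


# Example input with an extra field the scheduler does not need.
raw = {
    "caller_name": "A. Patient",
    "callback_number": "555-0100",
    "requested_slot": "2024-03-04T10:00",
    "symptom_notes": "mentioned during call",
}
stored = minimize_call_record(raw, consent_given=True)
```

An allow-list is used instead of a block-list so that any new field the AI starts capturing is dropped by default until someone decides it is needed.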

GDPR and Data Privacy Considerations for U.S. Healthcare Providers Using AI

The U.S. does not have a federal law exactly like GDPR. But American healthcare providers can fall under GDPR when they offer services to people located in the EU, handle EU patient data, or rely on EU-based AI providers. GDPR gives patients more power over their personal data, like the right to move data, be forgotten, or restrict or object to data processing.

Recommended GDPR compliance steps include:

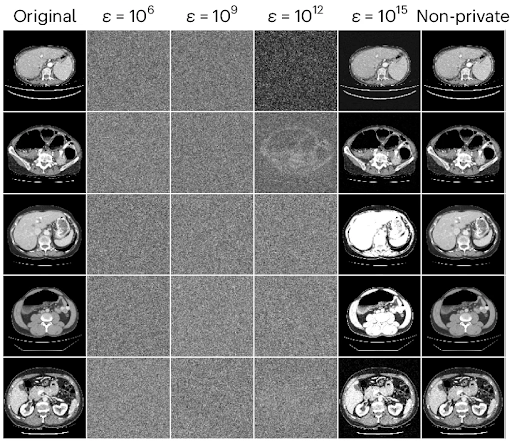

- Data Anonymization: Removing personal info so AI can use data safely without risking privacy.

- Regular AI Audits: Checking AI systems often to ensure privacy rules are followed and to find any leaks.

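- Quick Breach Notifications: Reporting data breaches to regulators within 72 hours of becoming aware of them where feasible, and informing affected people without undue delay when the risk to them is high.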

- Privacy Officers: Having a person responsible for data protection and GDPR compliance.

U.S. providers who understand GDPR rules can prepare better for audits and protect data more strictly. This leads to better service and trust.
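For the breach-notification step listed above, GDPR expects the regulator to be notified without undue delay and, where feasible, within 72 hours of the organization becoming aware of the breach. A minimal sketch of tracking that window follows; the function names are hypothetical and the dates are sample values.

```python
from datetime import datetime, timedelta, timezone

NOTIFICATION_WINDOW = timedelta(hours=72)


def notification_deadline(aware_at):
    """Latest time to notify the regulator, counted from awareness."""
    return aware_at + NOTIFICATION_WINDOW


def hours_remaining(aware_at, now):
    """Hours left in the window; negative once the deadline has passed."""
    return (notification_deadline(aware_at) - now).total_seconds() / 3600


# Sample timestamps in UTC to avoid time-zone ambiguity.
aware = datetime(2024, 3, 1, 9, 0, tzinfo=timezone.utc)
deadline = notification_deadline(aware)
```

The clock starts when the organization becomes aware of the breach, not when the breach happened, so recording that moment accurately matters.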

Maintaining HIPAA and GDPR Compliance through Collaboration and Continuous Improvement

Making AI follow both HIPAA and GDPR is ongoing work. Practices should involve medical staff, IT, lawyers, and privacy experts together.

This teamwork helps:

- Create plans that connect tech use with privacy policies.

- Focus on the biggest risks to data protection.

- Explain clearly to patients how AI handles their data.

- Adjust to new AI rules in the U.S. and worldwide.

Cybersecurity expert Abhishek Bansal says using Identity and Access Management (IAM) keeps AI environments secure by letting only authorized users handle sensitive data.
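The core idea of IAM, that only authorized users and components can act on sensitive data, can be sketched as a deny-by-default permission check. The roles and permission names here are invented for illustration; a real deployment would rely on the organization's IAM product rather than a hand-written table.

```python
# Hypothetical role-to-permission table.
ROLE_PERMISSIONS = {
    "clinician": {"read_record", "update_record"},
    "front_desk": {"read_schedule", "update_schedule"},
    "ai_phone_agent": {"read_schedule", "update_schedule"},
}


def is_allowed(role, permission):
    """Deny by default: unknown roles and unlisted permissions fail."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Here the AI phone agent is treated as its own role with scheduling permissions only, so it cannot read clinical records even if it is connected to the same systems.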

Also, teaching staff about AI rules stops careless mistakes that could cause violations. Healthcare must balance new tools with good governance to keep patient data safe.

The Importance of Privacy Impact Assessments (PIAs) in AI Healthcare Deployments

Privacy Impact Assessments look closely at privacy risks when adding AI systems. They consider:

- What data is collected and how much.

- How AI processes the data.

- Possible weaknesses or risks.

- Gaps in following laws.

Doing PIAs early and often helps healthcare groups add protections like stronger encryption, better consent rules, or revised data retention policies before problems happen.

Summary

U.S. healthcare providers using AI, like phone automation or clinical support, need strong data mapping and governance. Mapping patient data shows where it flows, which helps with GDPR compliance. Governance makes sure data is secure, used fairly, and legal.

Watching AI systems continually, reducing bias, and training staff all support responsible AI use.

By linking workflow automation with these rules, healthcare can work more efficiently while keeping patient data safe. Teams from different fields and privacy assessments also improve readiness for compliance.

Keeping up with changing laws in the U.S. (like HIPAA) and abroad (like GDPR) lowers legal risks and supports ethical AI use in handling healthcare data. Companies like Simbo AI show how AI can help healthcare while following privacy laws and protecting patient rights.

Frequently Asked Questions

What is the General Data Protection Regulation (GDPR) and why is it important for healthcare AI agents?

The GDPR is a European Union regulation that took effect in 2018 to protect the personal data and privacy of people in the EU. It mandates explicit consent, data subject rights, breach reporting, and strict data handling practices, which are critical for healthcare AI agents managing sensitive patient data to ensure compliance and safeguard privacy.

How do AI-driven healthcare systems pose privacy risks under GDPR?

Healthcare AI systems process large datasets containing Personally Identifiable Information (PII), such as biometric and health data. This heightens risks of data breaches, unauthorized access, and misuse, requiring strict adherence to GDPR principles like data minimization, transparency, and secure processing to mitigate privacy risks.

What are the key GDPR requirements healthcare organizations must focus on when deploying AI agents?

Healthcare organizations must ensure explicit consent for data processing, provide clear privacy notices, enable data subject rights (access, correction, deletion), implement data protection by design and default, securely store data, report breaches promptly, and appoint a Data Protection Officer (DPO) where GDPR requires one.

How can data anonymization benefit healthcare AI agents in GDPR compliance?

Data anonymization helps protect patient identities by removing or masking identifiable information, allowing AI agents to analyze data while ensuring GDPR compliance. It reduces privacy risks and limits exposure of sensitive data, supporting ethical AI use and minimizing legal liabilities.
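As a hedged illustration of masking identifiers, the sketch below drops direct identifiers and replaces the patient ID with a keyed hash. Note the assumption: this is pseudonymization, which GDPR still treats as personal data because whoever holds the key can re-link records. Full anonymization needs stronger measures. The field names and the key are placeholders.

```python
import hashlib
import hmac

# Hypothetical list of fields that identify a patient directly.
DIRECT_IDENTIFIERS = ("name", "phone", "email")


def pseudonymize_id(patient_id, secret_key):
    """Keyed SHA-256 hash of the patient ID (stable for a given key)."""
    return hmac.new(secret_key, patient_id.encode("utf-8"),
                    hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, secret_key):
    """Remove direct identifiers and swap the ID for a pseudonym."""
    cleaned = {key: value for key, value in record.items()
               if key not in DIRECT_IDENTIFIERS and key != "patient_id"}
    cleaned["pseudonym"] = pseudonymize_id(record["patient_id"], secret_key)
    return cleaned


# Placeholder key for the example; a real key belongs in a secrets store.
EXAMPLE_KEY = b"example-key-not-for-production"
sample = {"patient_id": "P-001", "name": "A. Patient",
          "phone": "555-0100", "age": 54}
masked = strip_direct_identifiers(sample, EXAMPLE_KEY)
```

The same patient ID always maps to the same pseudonym under one key, so records can still be joined for analysis without exposing the name or phone number.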

What role does data mapping play in GDPR compliance for healthcare AI?

Data mapping identifies what patient data is collected, where it resides, who accesses it, and how it is processed. This provides transparency and control, supporting GDPR mandates for data accountability and enabling healthcare organizations to implement effective data governance and compliance strategies.

How should healthcare providers ensure secure data handling for AI technologies under GDPR?

Providers must implement robust security measures such as encryption, access controls, regular security audits, and secure data transmission protocols (e.g., TLS). These controls protect healthcare data processed by AI from breaches and unauthorized access, fulfilling GDPR’s security requirements.

What are the expanding data subject rights under GDPR relevant to healthcare AI?

Healthcare AI must accommodate rights including the right to access personal data, correct inaccuracies, erase data (‘right to be forgotten’), data portability, and the right to restrict or object to data processing. Systems must be designed to manage and respect these rights promptly.

Why is employee training important for GDPR compliance in healthcare AI deployments?

Training ensures that healthcare staff understand GDPR principles, data privacy risks, and their responsibilities when handling AI-managed patient data. Frequent training fosters a culture of compliance, reduces human error, and helps maintain ongoing adherence to privacy regulations.

How can engaging privacy professionals enhance GDPR adherence in healthcare AI?

Privacy experts provide up-to-date regulatory guidance, assist in implementing best practices, conduct risk assessments, and help maintain compliance amidst evolving rules, ensuring healthcare AI systems meet GDPR standards effectively and ethically.

What strategies can healthcare organizations use to maintain GDPR compliance with AI as regulations evolve?

Organizations should conduct regular data audits, update privacy policies, enforce strong data governance, monitor AI systems for compliance, ensure transparency with patients, and liaise with regulators and privacy professionals to adapt quickly to regulatory changes and emerging AI-specific guidelines.

The post The Critical Role of Data Mapping and Governance in Managing Sensitive Healthcare Data for GDPR Compliance in AI Deployments first appeared on Simbo AI – Blogs.