In recent years, AI has moved from research labs into everyday healthcare work. AI systems now assist with diagnosis, treatment planning, administrative paperwork, and insurance claims processing. These tools can speed up work, but serious concerns remain about transparency and accountability in how AI is used.

Several states have passed laws governing AI use in healthcare. California, Colorado, and Utah, for example, require healthcare providers and insurers to tell patients when AI is used, and they require licensed clinicians to make the final decisions about patient care. This keeps humans in charge.

Large healthcare payers also face lawsuits across the country over alleged misuse of AI. In July 2023, Cigna was sued over allegations that it denied more than 300,000 claims through an automated process without proper clinical review. UnitedHealth Group and Humana face similar lawsuits alleging that they substituted AI for human clinical judgment, harming patients and violating consumer protection laws.

At the federal level, AI policy is unsettled. President Biden issued an executive order promoting responsible AI development, and President Trump later moved to rescind earlier AI guidelines. This back-and-forth creates uncertainty, which makes state laws and internal compliance especially important for organizations using AI in healthcare.

The Ethical Imperative: Balancing Innovation with Patient Rights

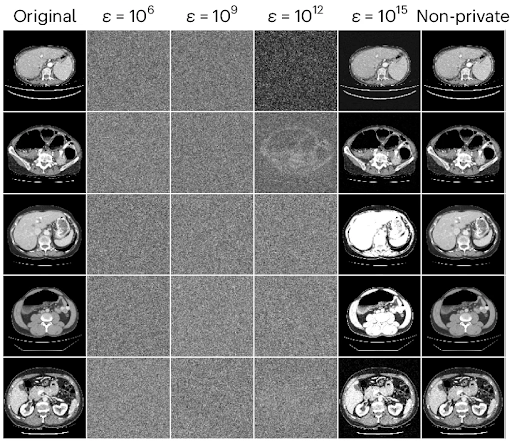

Healthcare AI works by analyzing large volumes of patient data, such as health records, medical images, and genetic data. Relying on so much sensitive data raises important ethical questions about privacy, consent, security, bias, and accountability for decisions.

Medical students and early-career healthcare workers stress the need to balance new AI tools with ethical care. They want AI to help patients without eroding patient autonomy or reducing physician involvement.

The main ethical concerns with AI in healthcare include:

- Patient Privacy and Data Security: AI systems access sensitive health data that must be protected. Security breaches such as the 2024 WotNot breach have exposed weaknesses and risks to patient privacy.

- Informed Consent: Patients should know when AI is part of their care. Laws in Utah and California require doctors to inform patients about AI use so they can make informed choices.

- Algorithmic Bias: Many AI models are trained mostly on data from white patients, so they may perform poorly for patients from other groups and contribute to misdiagnoses.

- Transparency and Explainability: AI decisions can be opaque. Doctors and patients often cannot see how an AI system reached its conclusion. Explainable AI (XAI) addresses this by showing the reasons behind AI suggestions, which helps people trust them.

- Human Oversight: AI should support doctors, not replace them. Doctors must continue to apply their own judgment rather than relying solely on AI when making decisions.
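To make the explainability point concrete, here is a minimal Python sketch, assuming a simple linear risk score. For a linear model, each feature's contribution is just its weight times its value, so the score can be broken down into reasons a clinician can inspect. The feature names, weights, and the `explain_score` function are hypothetical and do not describe any real clinical model; more complex models need dedicated XAI methods such as SHAP or LIME.

```python
# Minimal explainability sketch for a hypothetical linear risk score.
# Feature names and weights are illustrative assumptions, not a validated clinical model.
WEIGHTS = {"age_over_65": 1.2, "prior_admissions": 0.8, "abnormal_lab": 1.5}
INTERCEPT = -2.0


def explain_score(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the raw score and each feature's contribution (weight * value), largest first."""
    contributions = [(name, weight * features.get(name, 0.0)) for name, weight in WEIGHTS.items()]
    score = INTERCEPT + sum(value for _, value in contributions)
    # Sort by absolute size so the most influential factors are listed first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


if __name__ == "__main__":
    score, reasons = explain_score({"age_over_65": 1, "prior_admissions": 2, "abnormal_lab": 0})
    print(f"score={score:.2f}")
    for name, value in reasons:
        print(f"  {name}: {value:+.2f}")
```

Listing contributions alongside the score lets a reviewer see, for example, that prior admissions drove a high-risk flag, and challenge it if that history is wrong or irrelevant.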

The Importance of Human Oversight in AI Systems

Human oversight means people remain accountable for AI-assisted decisions, which keeps patients safe and limits errors, bias, and loss of trust. States such as California require licensed clinicians to retain the final say in medical decisions, especially for treatments and insurance claims, so AI cannot act on its own without clinical review.

Healthcare leaders should recognize that AI can streamline processes but should not operate without human supervision. In practice, oversight means verifying AI output, weighing each patient's circumstances, and applying professional judgment when the AI and the clinician disagree.
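The oversight rule described above, where the AI may recommend but a licensed clinician decides, can be expressed as a simple gate in software. The Python sketch below is a hypothetical illustration: the `ClaimReview` record, the `finalize` and `overrode_ai` functions, and the license-ID check are assumptions for the example, not a description of any payer's or vendor's system or of what any state law specifically mandates.

```python
from dataclasses import dataclass
from typing import Optional

VALID_DECISIONS = {"approve", "deny"}


@dataclass
class ClaimReview:
    """Hypothetical record pairing an AI recommendation with a human decision."""
    claim_id: str
    ai_recommendation: str                  # what the model suggested
    reviewer_license: Optional[str] = None  # ID of the licensed clinician who decided
    final_decision: Optional[str] = None


def finalize(review: ClaimReview, reviewer_license: str, decision: str) -> ClaimReview:
    """Record a final decision; it is only accepted with a licensed reviewer attached."""
    if not reviewer_license:
        raise PermissionError("A licensed clinician must make the final decision.")
    if decision not in VALID_DECISIONS:
        raise ValueError(f"Unknown decision: {decision!r}")
    review.reviewer_license = reviewer_license
    review.final_decision = decision
    return review


def is_final(review: ClaimReview) -> bool:
    """The AI recommendation alone never counts as a final decision."""
    return review.final_decision is not None and review.reviewer_license is not None


def overrode_ai(review: ClaimReview) -> bool:
    """True when the clinician disagreed with the AI, a case worth tracking."""
    return is_final(review) and review.final_decision != review.ai_recommendation
```

Logging cases where the clinician overrides the AI gives governance teams a concrete signal to review, rather than letting disagreements disappear once a claim is closed.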

Hospitals and clinics are advised to form governance teams to manage AI use. Their responsibilities include:

- AI Risk Assessments: Regularly checking AI tools for harm or unfair effects.

- Bias Audits: Testing AI to find and fix biases in data or software.

- Performance Monitoring: Watching AI accuracy and patient outcomes over time.

- Training and Education: Teaching staff about AI limits and ethics.

- Incident Reporting: Having clear steps to report and fix AI mistakes or problems.

These steps help keep AI use transparent and safe as it is integrated into healthcare routines.
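A bias audit often starts with a simple question: does the tool perform equally well across patient groups? The Python sketch below computes accuracy per group from reviewed cases and flags groups that trail the best-performing group by more than a chosen margin. The record format, the group labels, and the 5-point `max_gap` threshold are illustrative assumptions; a real audit would use metrics and thresholds chosen with clinical and statistical input.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples from reviewed cases."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def flag_disparities(records, max_gap=0.05):
    """Return groups whose accuracy trails the best group by more than max_gap."""
    accuracy = accuracy_by_group(records)
    if not accuracy:
        return []
    best = max(accuracy.values())
    return sorted(group for group, acc in accuracy.items() if best - acc > max_gap)


if __name__ == "__main__":
    sample = [
        ("group_a", "positive", "positive"),
        ("group_a", "negative", "negative"),
        ("group_b", "negative", "positive"),
        ("group_b", "positive", "positive"),
    ]
    print(accuracy_by_group(sample))
    print(flag_disparities(sample))
```

Overall accuracy can hide problems (a tool might miss true positive cases more often in one group), so audits usually also compare error types such as false-negative rates per group.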

AI and Workflow Integration in Medical Practices

One common use of AI today is automating front-office tasks. Companies such as Simbo AI build AI phone and answering services designed for healthcare, which can handle appointment scheduling, patient reminders, insurance verification, and call routing.

Automation can reduce errors, ease staff workload, and give patients faster, better service. However, practice managers and IT teams must make sure automated systems comply with laws on transparency and patient data protection.

For example:

- Patient Communication: AI systems must tell patients when they are talking with a machine, as laws in states such as Utah and California require.

- Data Security: All messages and calls must be encrypted and meet HIPAA standards to avoid data leaks.

- Human Backup: Automated phone systems should be able to transfer complex or urgent calls quickly to human staff.

- Transparency in AI Actions: Systems such as Simbo AI's provide reports that help managers review the quality of the AI's work.

By combining automated workflows with human checks, healthcare providers can work more efficiently without compromising patient trust or ethics. Automation also frees clinical staff to spend more time with patients instead of on routine tasks.
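The disclosure and human-backup requirements above can be sketched as a routing rule. The Python example below assumes a hypothetical answering service that receives a call transcript plus an intent-confidence score from some upstream model; the keyword lists, the 0.8 threshold, and the function names are illustrative assumptions and do not describe how Simbo AI or any other product actually works. Keyword matching is a deliberately crude stand-in for real urgency detection.

```python
from dataclasses import dataclass

# Illustrative assumptions only; a real system would need clinically reviewed triage rules.
DISCLOSURE = "You are speaking with an automated assistant. Say 'staff' at any time to reach a person."
URGENT_TERMS = ("chest pain", "bleeding", "can't breathe", "overdose")
HUMAN_REQUEST_TERMS = ("staff", "human", "operator")


@dataclass
class RoutingDecision:
    destination: str  # "ai_assistant" or "human_staff"
    reason: str


def start_call() -> str:
    """Every call opens by disclosing that the caller is talking to an automated system."""
    return DISCLOSURE


def route_call(transcript: str, intent_confidence: float, min_confidence: float = 0.8) -> RoutingDecision:
    """Send urgent, explicitly requested, or uncertain calls to a person; automate the rest."""
    text = transcript.lower()
    if any(term in text for term in URGENT_TERMS):
        return RoutingDecision("human_staff", "possible urgent symptom")
    if any(term in text for term in HUMAN_REQUEST_TERMS):
        return RoutingDecision("human_staff", "caller asked for a person")
    if intent_confidence < min_confidence:
        return RoutingDecision("human_staff", "low intent confidence")
    return RoutingDecision("ai_assistant", "routine request")
```

Urgent terms are checked first so that a caller describing a possible emergency is never kept in an automated flow, even when the intent model is confident about a routine request.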

Addressing Challenges and Building Trust in AI Healthcare Solutions

Despite AI's promise, many healthcare workers remain hesitant to use it: over 60% worry about poor transparency, weak data security, and unclear AI decision-making. For AI to succeed, trust must be built on several fronts:

- Explainable AI (XAI): Helps doctors understand AI advice, which makes them more willing to use it.

- Strong Cybersecurity: Breaches like WotNot show the need for strong data protection against hackers.

- Ethical Frameworks: AI must follow laws that protect patient privacy and ensure fair treatment.

- Inclusive Data Practices: Training AI on diverse patient groups reduces bias and improves fairness.

- Interdisciplinary Collaboration: Bringing together ethicists, doctors, tech experts, and patients helps make balanced AI decisions.

- Ongoing Education: Health workers need training about AI limits, ethics, and best ways to use it.

By addressing these areas, healthcare managers can guide their organizations through safe, deliberate AI adoption.

Navigating State Regulations for AI Use in Healthcare

With federal policy unsettled, state rules have become especially important. Key state laws affecting healthcare AI include:

- California: Requires health and disability insurers to have licensed clinicians make final medical necessity decisions rather than leaving them to AI. Providers must clearly tell patients when AI is used.

- Colorado: Health insurers must demonstrate, through risk assessments and reviews, that their AI use is lawful and non-discriminatory, and must maintain governance structures that ensure compliance and transparency.

- Utah: Health professionals must clearly inform patients when AI tools are involved in their care.

- Texas: Recently settled lawsuits against AI vendors underscore the importance of regulatory compliance and truthful AI marketing.

Healthcare managers and IT staff must track changing laws and regularly audit AI tools for compliance. Internal AI oversight teams can help organizations balance new technology with regulatory requirements.

Human Oversight and AI: A Practical Guide for Healthcare Leaders

Healthcare administrators, owners, and IT managers can manage AI effectively by:

- Understanding that AI is a tool to help, not replace, healthcare workers.

- Making sure patients are informed whenever AI is used in their care, following state rules.

- Creating committees to assess risks, watch AI performance, and keep ethical and legal standards.

- Training staff about AI strengths, limitations, and rule compliance.

- Working with IT to make sure data security meets or goes beyond required standards.

- Regularly checking AI systems for bias and accuracy to catch problems quickly.

- Planning clear steps to respond if AI causes errors or patient safety concerns.

With these steps, healthcare leaders can adopt AI tools such as Simbo AI's front-office automation to improve operations while protecting patient rights and upholding ethical care.
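The recommendations to check AI accuracy regularly and to plan a response to errors can be combined in a small monitor. The Python sketch below, built on hypothetical names and thresholds, compares AI outputs with the clinician's final decision over a rolling window of recent cases and signals when agreement falls low enough to open an incident review. Treating clinician agreement as the accuracy measure is an assumption; organizations may prefer outcome-based measures.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Tracks agreement between AI output and the clinician's decision over recent cases."""

    def __init__(self, window: int = 100, alert_below: float = 0.9):
        self.results = deque(maxlen=window)  # oldest results drop off automatically
        self.alert_below = alert_below

    def record(self, ai_output: str, clinician_decision: str) -> None:
        self.results.append(ai_output == clinician_decision)

    def accuracy(self) -> Optional[float]:
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_incident_review(self) -> bool:
        """Only alert once the window is full, so a few early cases do not trigger false alarms."""
        accuracy = self.accuracy()
        return (
            accuracy is not None
            and len(self.results) == self.results.maxlen
            and accuracy < self.alert_below
        )
```

A rolling window reflects recent behavior, so a drop in performance after a software update or a shift in the patient population shows up quickly instead of being averaged away by older results.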

Final Thoughts

AI is changing U.S. healthcare, from supporting clinical decisions to handling office tasks. This change must be monitored closely to avoid harm, maintain patient trust, and comply with state laws. Human oversight is key to balancing new technology with ethical care.

Healthcare leaders need to understand the regulatory, operational, and ethical challenges of AI to use it well. Companies like Simbo AI provide useful tools to improve healthcare work, but using them well requires careful attention to transparency, security, and clinical judgment.

The future of AI in healthcare depends not just on smart software but on human professionals guiding its responsible and fair use. This balance will help make patient care safer and better.

Frequently Asked Questions

What recent trends are impacting AI regulation in healthcare?

State legislatures are actively enacting laws to regulate the use of AI in healthcare, driven by consumer protection concerns, the need for accountability, and the growing oversight of AI applications in medical settings.

What triggered states to regulate healthcare AI?

Factors include technological advances in AI, consumer demand for accountability and transparency, and existing uncertainties in federal regulation regarding AI’s role in healthcare.

What is the significance of Executive Orders by U.S. Presidents on AI?

President Biden’s Executive Order focused on responsible AI development, while President Trump’s order attempted to rescind previous guidelines, creating uncertainty for healthcare AI regulations.

What are common legal challenges faced by healthcare payers using AI?

Recent class action lawsuits challenge claim denials by healthcare payers such as Cigna, alleging improper use of AI tools without adequate clinical review in violation of consumer rights.

How have states like California responded to AI in healthcare?

California’s laws require that healthcare providers retain ultimate responsibility over medical decisions influenced by AI, and mandate transparency in AI’s use in patient communications.

What frameworks have states established for AI in health insurance?

Colorado has implemented regulations requiring health insurers to demonstrate non-discrimination in AI models and establish governance structures ensuring compliance with AI regulations.

How does Utah’s legislation specifically regulate AI usage?

Utah mandates that licensed healthcare professionals disclose AI usage to patients, ensuring transparency in communication about AI’s role in care provision.

What are the requirements for health and disability insurers in California regarding AI?

These insurers must implement strict procedures for AI utilization review, ensuring a licensed healthcare professional makes final medical necessity decisions.

What proactive measures should healthcare boards take regarding AI regulations?

Healthcare boards should monitor evolving state laws, assess AI compliance, and audit AI systems regularly to ensure equitable patient treatment and transparency.

What key themes are emerging in state legislation regulating AI in healthcare?

Key themes include ensuring consumer protection, promoting transparency in AI usage, requiring human oversight in medical decisions, and preventing algorithmic discrimination.

The post Human Oversight in AI-Driven Healthcare: Balancing Innovation with Ethical Medical Decision-Making first appeared on Simbo AI – Blogs.