Voice AI agents help automate patient interactions by understanding spoken commands and giving quick, natural replies without needing a screen or manual input.

These agents combine several technologies:

- Automatic Speech Recognition (ASR) converts spoken words into text.

- Natural Language Processing (NLP) understands the meaning and context behind patient questions and requests.

- Text-to-Speech (TTS) generates voice responses that sound natural and human.
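The three stages above can be chained into one request/response loop. The Python sketch below is a minimal illustration that assumes each stage is a plain function. The names `VoicePipeline`, `fake_asr`, `keyword_nlu`, `simple_responder`, and `fake_tts` are hypothetical stand-ins, not any vendor's API. A real deployment would plug actual speech engines into the same slots.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures; real ASR/NLP/TTS engines would fill these slots.
Transcriber = Callable[[bytes], str]   # audio -> text (ASR)
IntentParser = Callable[[str], dict]   # text -> intent (NLP)
Synthesizer = Callable[[str], bytes]   # text -> audio (TTS)


@dataclass
class VoicePipeline:
    asr: Transcriber
    nlu: IntentParser
    respond: Callable[[dict], str]
    tts: Synthesizer

    def handle(self, audio: bytes) -> tuple[str, bytes]:
        text = self.asr(audio)         # 1. speech -> text
        intent = self.nlu(text)        # 2. text -> meaning
        reply = self.respond(intent)   # 3. decide what to say
        return reply, self.tts(reply)  # 4. text -> speech


# Toy stand-ins so the sketch runs without any speech engine.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" is already text


def keyword_nlu(text: str) -> dict:
    if "appointment" in text.lower():
        return {"intent": "schedule"}
    return {"intent": "unknown"}


def simple_responder(intent: dict) -> str:
    if intent["intent"] == "schedule":
        return "Sure, what day works best for you?"
    return "Let me connect you with a staff member."


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # a real TTS engine would return audio samples


pipeline = VoicePipeline(fake_asr, keyword_nlu, simple_responder, fake_tts)
reply, audio_out = pipeline.handle(b"I need to book an appointment")
print(reply)  # Sure, what day works best for you?
```

Keeping each stage behind a simple function boundary makes it easier to swap one ASR or TTS engine for another without touching the dialog logic.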

For medical staff and IT teams, voice agents reduce work by automating tasks like appointment reminders, medication tracking, and follow-up calls.

This gives clinic staff more time to focus on complex care, while patients get 24/7 access to communication.

Studies project that by 2025 about 90% of U.S. hospitals will use AI agents to improve patient care and support medical teams.

Early adopters have reported up to 30% lower costs, faster response times, and shorter hold times for patients.

Emotion Detection: Enhancing Patient Engagement

A new feature in healthcare voice agents is emotion detection. It lets AI systems analyze vocal cues such as tone and pitch to infer a patient's mood and feelings like stress or confusion, so the AI can respond with more care and gentleness.

This is important because many patients call with sensitive or emotional issues.

An AI that knows how a person feels can calm the patient or quickly send urgent calls to a human.

For example, emotion detection can help with mental health by hearing signs of depression or anxiety during symptom checks.

This lets healthcare workers step in earlier or provide extra help when needed.
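The escalation behavior described above can be sketched as a small routing rule. This sketch assumes an upstream emotion model (not shown) that scores each utterance between 0 and 1 for labels such as `distress` and `confusion`. The `Route` values, thresholds, labels, and urgent phrases are all hypothetical and would need clinical review and tuning on real calls.

```python
from enum import Enum


class Route(Enum):
    CONTINUE = "continue"  # carry on with the normal flow
    SOFTEN = "soften"      # slower, gentler, simpler phrasing
    ESCALATE = "escalate"  # transfer to a human


# Hypothetical values; a real deployment would tune these on labeled calls.
DISTRESS_THRESHOLD = 0.7
CONFUSION_THRESHOLD = 0.5
URGENT_PHRASES = ("chest pain", "can't breathe", "suicidal")


def route_call(transcript: str, emotion_scores: dict[str, float]) -> Route:
    """Choose a route from the transcript and per-emotion scores in [0, 1]."""
    text = transcript.lower()
    # Explicit urgent content always goes to a human, regardless of tone.
    if any(phrase in text for phrase in URGENT_PHRASES):
        return Route.ESCALATE
    if emotion_scores.get("distress", 0.0) >= DISTRESS_THRESHOLD:
        return Route.ESCALATE
    if emotion_scores.get("confusion", 0.0) >= CONFUSION_THRESHOLD:
        return Route.SOFTEN
    return Route.CONTINUE


print(route_call("I have chest pain", {"distress": 0.2}))  # Route.ESCALATE
```

Checking explicit content before emotion scores is a deliberate choice in this sketch: a calm-sounding caller who reports an emergency should still reach a person.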

Voice AI companies train models on speech data to improve how accurately the AI recognizes emotions.

Research shows patient satisfaction scores rise 20-30% when this feature is used.

Multilingual Support: Addressing Diversity in U.S. Healthcare

The United States has many languages and cultures.

More than 21% of people speak a language other than English at home.

This can make it hard for doctors to communicate clearly with all patients.

AI voice agents now offer multilingual support. They can recognize and respond in several languages and even switch between them mid-conversation, as people often do in everyday speech.

This helps patients who do not speak English well.

Multilingual voice AI makes care easier for groups such as elderly immigrants or rural patients who speak little English.

It helps patients follow treatment plans and show up for appointments.

A study by Mayo Clinic found that voice agents helped increase preventive care appointments by more than 15%.

This was because patients were contacted in their preferred language.

Healthcare staff report smoother patient conversations and higher satisfaction when using voice AI that handles multiple languages.

Some systems support more than 50 languages and adjust their tone and formality to cultural norms.
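Switching languages mid-conversation can be sketched as a per-turn decision. The example below assumes the speech recognizer reports a detected language and a confidence score for each utterance; that interface, the templates, the 0.8 threshold, and the English fallback are hypothetical choices for illustration.

```python
# Hypothetical reminder templates keyed by language code.
REMINDER_TEMPLATES = {
    "en": "Hi {name}, this is a reminder of your appointment on {date}.",
    "es": "Hola {name}, le recordamos su cita para el {date}.",
}


def choose_language(preferred: str, detected: str, confidence: float,
                    switch_at: float = 0.8) -> str:
    """Pick the reply language for this turn."""
    # Follow a mid-call switch only when the detector is confident about it.
    if detected in REMINDER_TEMPLATES and confidence >= switch_at:
        return detected
    # Otherwise stay with the patient's stored preference, if supported.
    if preferred in REMINDER_TEMPLATES:
        return preferred
    # Fallback; a real system might offer a human interpreter instead.
    return "en"


def render_reminder(lang: str, name: str, date: str) -> str:
    return REMINDER_TEMPLATES[lang].format(name=name, date=date)


# A Spanish-preferring patient says a few words in English (low confidence).
lang = choose_language(preferred="es", detected="en", confidence=0.55)
print(render_reminder(lang, "Ana", "12 de mayo"))
# Hola Ana, le recordamos su cita para el 12 de mayo.
```

Requiring high confidence before switching keeps a single borrowed English word from flipping the whole conversation out of the patient's preferred language.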

Integration with IoT and Wearable Devices: Towards Seamless Connected Care

Healthcare providers use Internet of Things (IoT) devices and wearables to check patients’ health remotely.

These devices track heart rate, activity, medication use, and other health data.

Connecting voice AI with IoT and wearables lets patients talk to their devices hands-free.

They can get alerts and updates through natural speech.

This brings benefits like:

- Remote patient monitoring: Voice agents remind patients to take medicine or alert doctors when health data looks unusual.

- Better patient follow-through: Timely, personal voice alerts encourage healthy habits and follow-up care.

- Hands-free control: This is helpful for elderly or disabled patients managing devices like blood pressure monitors by voice.

- Operational efficiency: Doctors can access up-to-date patient information during calls, helping them make faster decisions and reduce unnecessary visits.

Many U.S. medical centers are adopting connected health devices, which is accelerating this integration.
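The remote-monitoring flow in the first bullet above can be illustrated with a threshold check on incoming wearable readings. This is a minimal sketch: the metric names, the `Reading` type, and the numeric ranges are hypothetical placeholders, not clinical guidance. A real integration would receive readings from a device platform rather than a hard-coded list.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative ranges only: real limits are set per patient by clinicians.
NORMAL_RANGES = {
    "heart_rate_bpm": ("heart rate", 50, 110),
    "systolic_mmhg": ("systolic blood pressure", 90, 150),
}


@dataclass
class Reading:
    patient_id: str
    metric: str   # key into NORMAL_RANGES
    value: float


def check_reading(reading: Reading) -> Optional[str]:
    """Return a spoken alert if the reading is out of range, else None."""
    label, low, high = NORMAL_RANGES[reading.metric]
    if low <= reading.value <= high:
        return None
    return (f"Your {label} reading of {reading.value:g} is outside the expected "
            f"range. A member of your care team will follow up.")


readings = [
    Reading("p-001", "heart_rate_bpm", 72),
    Reading("p-001", "systolic_mmhg", 172),
]
for r in readings:
    alert = check_reading(r)
    if alert is not None:
        # In practice this text would go to TTS and to a clinician task queue.
        print(r.patient_id, "->", alert)
```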

AI and Workflow Automation in Healthcare Voice Systems

Healthcare offices handle many repetitive tasks that consume staff time and affect patient satisfaction.

Voice AI agents automate front-office jobs like:

- Appointment scheduling: Patients can book, change, or cancel visits without a human, reducing wait times.

- Reminders: Automated calls or texts remind patients about visits, medications, or care steps, lowering missed appointments.

- Symptom triage: AI collects initial patient info and gives simple advice before passing complex cases to staff.

- Patient education: Voice assistants provide health info, discharge instructions, or wellness tips tailored to each patient.
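Automated reminders largely come down to deciding when to call. The sketch below assumes a hypothetical clinic policy: calls three days and one day before the visit, placed only between 9:00 and 20:00 local time. Real systems would also need to handle time zones, patient opt-outs, and retries.

```python
from datetime import datetime, timedelta

# Hypothetical policy: remind 3 days and 1 day ahead, calling 9:00-20:00 only.
REMINDER_OFFSETS = (timedelta(days=3), timedelta(days=1))
CALL_WINDOW = (9, 20)  # local hours; calls allowed from start up to (not incl.) end


def clamp_to_window(t: datetime) -> datetime:
    """Move a call time into the allowed calling hours on the same day."""
    start, end = CALL_WINDOW
    if t.hour < start:
        return t.replace(hour=start, minute=0, second=0, microsecond=0)
    if t.hour >= end:
        return t.replace(hour=end - 1, minute=0, second=0, microsecond=0)
    return t


def schedule_reminders(appointment: datetime, now: datetime) -> list[datetime]:
    """Return reminder times that are still in the future and before the visit."""
    times = []
    for offset in REMINDER_OFFSETS:
        t = clamp_to_window(appointment - offset)
        if now < t < appointment:
            times.append(t)
    return times


# Booked on May 10 for an 8:30 a.m. visit on May 12: the 3-day reminder
# has already passed, so only the day-before call (9:00 on May 11) remains.
print(schedule_reminders(datetime(2025, 5, 12, 8, 30), datetime(2025, 5, 10, 12, 0)))
```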

Advanced voice AI can remember past conversations and handle multi-step tasks smoothly, which helps the system deliver more precise, personalized service over time.
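Handling a multi-step task such as booking usually means keeping a small dialog state that accumulates details across turns and asks only for what is still missing. The sketch below assumes an NLU step (not shown) that returns extracted fields as a dictionary; `BookingState`, the slot names, and the prompts are hypothetical.

```python
from dataclasses import dataclass, field

REQUIRED_SLOTS = ("patient_name", "date", "time")  # hypothetical booking fields
PROMPTS = {
    "patient_name": "Can I have your full name?",
    "date": "What day would you like to come in?",
    "time": "What time works best?",
}


@dataclass
class BookingState:
    slots: dict[str, str] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)

    def update(self, utterance: str, extracted: dict[str, str]) -> str:
        """Merge this turn's fields into the state and return the next prompt."""
        self.history.append(utterance)
        self.slots.update({k: v for k, v in extracted.items() if k in REQUIRED_SLOTS})
        # Ask for the first slot that is still missing.
        for slot in REQUIRED_SLOTS:
            if slot not in self.slots:
                return PROMPTS[slot]
        return (f"Booked {self.slots['patient_name']} for "
                f"{self.slots['date']} at {self.slots['time']}.")


state = BookingState()
print(state.update("I'd like to book a visit", {}))
# -> Can I have your full name?
print(state.update("Maria Lopez, next Tuesday",
                   {"patient_name": "Maria Lopez", "date": "next Tuesday"}))
# -> What time works best?
print(state.update("Around 10 am", {"time": "10 am"}))
# -> Booked Maria Lopez for next Tuesday at 10 am.
```

Because the patient gave a name and a date in one sentence, the second turn skips straight to the time question; this is the kind of context retention the paragraph above describes.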

For healthcare providers, these AI tools bring:

- Up to 25% lower administrative costs at sites using AI voice bots.

- 40% faster call resolution, including for complex or multilingual requests.

- Up to 50% shorter wait times, improving both patient experience and workflow.

Many large healthcare organizations use on-site AI solutions to keep patient voice data private and comply with HIPAA.

Meeting Challenges: Accuracy, Privacy, and Compliance

Healthcare voice agents offer benefits but face some challenges:

- Diverse accents and speech: The U.S. has many regional accents. Speech recognition must keep getting better.

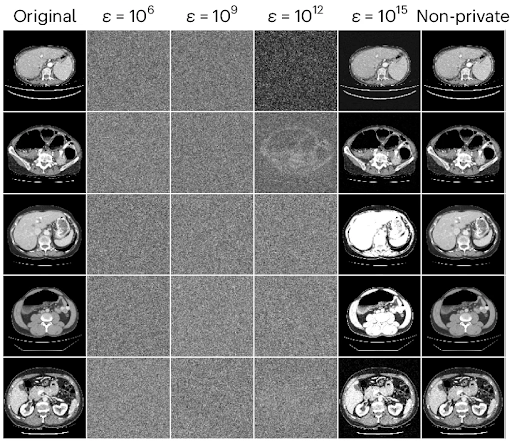

New techniques and training data are helping to close these gaps. - Data privacy and security: Protecting patient information is very important.

Voice AI follows rules like HIPAA and GDPR by encrypting data, anonymizing it, and getting user permission.

Many healthcare groups prefer on-site setups to control data better. - Background noise: Clinics can be noisy.

New noise-canceling methods improve accuracy in these real settings. - Ethical AI use: AI must be fair and avoid bias.

Diverse training and transparency help keep patient trust.
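Anonymization is often applied to call transcripts before they are stored or reused. The regex-based redaction below is only a minimal illustration. The patterns are assumptions, and they do not cover all 18 identifier types in the HIPAA Safe Harbor de-identification standard (names, for instance, typically require a named-entity model). It is no substitute for a reviewed de-identification process.

```python
import re

# Illustrative patterns only; order matters (MRNs are masked before phone
# numbers so a long record number is not mistaken for a phone number).
PATTERNS = [
    ("[MRN]", re.compile(r"\bMRN[:#\s]*\d+\b", re.IGNORECASE)),
    ("[PHONE]", re.compile(r"(?<!\d)(?:\+?1[-.\s]?)?\(?\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}(?!\d)")),
    ("[EMAIL]", re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")),
    ("[DATE]", re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b")),
]


def redact(transcript: str) -> str:
    """Replace matched identifiers in a transcript with placeholder tags."""
    for tag, pattern in PATTERNS:
        transcript = pattern.sub(tag, transcript)
    return transcript


print(redact("Call me at (555) 123-4567 about MRN 88231, born 3/14/1962."))
# Call me at [PHONE] about [MRN], born [DATE].
```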

Specific Considerations for U.S. Healthcare Providers

Medical leaders and IT managers in the U.S. should keep in mind:

- Choose vendors who understand healthcare rules and multilingual voice AI well.

- Test how voice AI works with existing IoT and wearable devices to ensure smooth operation.

- Look for platforms with emotion detection to improve patient conversations and quickly flag urgent cases.

- Consider solutions that run on-site or in a hybrid cloud to meet privacy laws while scaling AI.

- Train staff to use voice AI alongside human care, keeping a personal touch for tough cases.

- Track metrics such as patient engagement, wait times, and first-call resolution rates to refine how AI is used.
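The metrics in the last recommendation are straightforward to compute from call logs. The sketch below assumes a hypothetical `CallRecord` with one entry per call; the sample values are made up for illustration and are not results from any deployment. `percent_change` shows how a reduction such as a 50% shorter wait time would be calculated against a baseline.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    wait_seconds: float
    resolved_first_call: bool
    escalated_to_human: bool


def summarize(calls: list[CallRecord]) -> dict[str, float]:
    """Compute simple service metrics over a batch of call records."""
    if not calls:
        raise ValueError("no calls to summarize")
    n = len(calls)
    return {
        "avg_wait_seconds": mean(c.wait_seconds for c in calls),
        "first_call_resolution_rate": sum(c.resolved_first_call for c in calls) / n,
        "escalation_rate": sum(c.escalated_to_human for c in calls) / n,
    }


def percent_change(before: float, after: float) -> float:
    """Relative change from a baseline; -50.0 means a 50% reduction."""
    return (after - before) / before * 100


calls = [
    CallRecord(30.0, True, False),
    CallRecord(90.0, False, True),
    CallRecord(60.0, True, False),
    CallRecord(20.0, True, False),
]
metrics = summarize(calls)
print(metrics)
# Compared against a hypothetical 100-second baseline wait:
print(percent_change(before=100.0, after=metrics["avg_wait_seconds"]))  # -50.0
```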

By adopting these emerging healthcare voice AI trends, U.S. providers can improve patient communication, boost efficiency, and build care systems that serve diverse patients and keep pace with new technology.

Frequently Asked Questions

What are AI voice agents and how do they function?

AI voice agents are software systems that understand spoken commands and respond with synthesized speech, using speech-to-text transcription, natural language processing, and text-to-speech conversion to enable natural, screen-free interaction.

What core technologies power AI voice agents?

They rely on Speech-to-Text (STT) to transcribe voice, Natural Language Processing (NLP) to interpret intent and context, and Text-to-Speech (TTS) to generate spoken responses, creating fluid, human-like conversations.

What types of AI voice agents exist?

Types include rule-based agents performing simple commands, goal-driven agents managing multi-step tasks with context retention, and learning agents that adapt over time by learning from past interactions.

How are voice agents used in healthcare?

They automate appointment reminders, medication tracking, and follow-ups, improve patient engagement, support multilingual communication, reduce administrative workload, and provide 24/7 access, benefiting especially elderly or digitally limited patients.

What challenges do voice agents face in healthcare settings?

Challenges include handling diverse accents, background noise, and emotional nuances; keeping latency low enough for natural conversation; ensuring privacy and compliance (e.g., HIPAA); and infrastructure limitations that affect usability.

Why is voice cloning important for familiarity in healthcare AI agents?

Voice cloning can enhance familiarity by enabling personalized, consistent voice interactions that build trust and comfort for patients, improving engagement and emotional connection.

How do voice agents improve accessibility in healthcare?

By supporting multilingual and regional dialects, enabling hands-free interaction, and providing natural speech interfaces, voice agents make healthcare more accessible to diverse and digitally less literate populations.

What future trends are shaping healthcare voice agents?

Emerging trends include expanded multilingual support, emotion detection to adjust responses, avatar integration for expressive digital presence, and IoT/wearable device integration for seamless healthcare interactions.

How do voice agents differ from chatbots?

Voice agents interact through spoken conversation powered by speech recognition and synthesis, whereas chatbots typically communicate via text-based interfaces.

How is privacy and security managed in healthcare voice AI?

Privacy is addressed through on-premise deployment options, anonymizing voice data, and ensuring compliance with healthcare regulations like HIPAA to protect sensitive patient information.

The post Future Trends in Healthcare Voice Agent Technology: Emotion Detection, Multilingual Support, and Integration with IoT and Wearable Devices first appeared on Simbo AI – Blogs.