An AI voice agent is software that handles phone calls autonomously: it understands natural language, formulates responses, and completes tasks through automation tools and APIs. Unlike traditional interactive voice response (IVR) systems or scripted callbots, modern AI voice agents combine Speech-to-Text (STT), large language models (LLMs), and Text-to-Speech (TTS), which allows far more natural and flexible conversations. Because they operate around the clock and can handle thousands of calls simultaneously, they are especially useful for healthcare providers with high call volumes.
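To make the STT, LLM, and TTS combination concrete, here is a minimal Python sketch of one conversational turn in a cascaded voice agent. The `VoiceAgent` class and the three lambda stand-ins are hypothetical placeholders, not any vendor’s API; in practice each callable would wrap a real streaming speech or language-model service.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stage signatures: any real STT, LLM, or TTS client could be wrapped to fit.
SpeechToText = Callable[[bytes], str]
LanguageModel = Callable[[list[dict[str, str]]], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class VoiceAgent:
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech
    history: list[dict[str, str]] = field(default_factory=list)

    def handle_turn(self, caller_audio: bytes) -> bytes:
        """Run one caller turn through STT, then the LLM, then TTS."""
        text = self.stt(caller_audio)                            # 1. transcribe the caller
        self.history.append({"role": "user", "content": text})
        reply = self.llm(self.history)                           # 2. reason over the whole call so far
        self.history.append({"role": "assistant", "content": reply})
        return self.tts(reply)                                   # 3. synthesize the spoken reply


# Toy stand-ins so the sketch runs offline: "audio" is just UTF-8 text here.
agent = VoiceAgent(
    stt=lambda audio: audio.decode("utf-8"),
    llm=lambda history: f"You asked: {history[-1]['content']}",
    tts=lambda text: text.encode("utf-8"),
)
print(agent.handle_turn(b"What are your office hours?"))
```

Passing the full message history to the language model on every turn is what lets the agent carry context across the call.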

In healthcare, AI voice agents already book patient appointments, answer common questions about office hours or procedures, make follow-up calls, qualify leads for health plans, and update patient records in Customer Relationship Management (CRM) systems such as Salesforce and HubSpot. Because they can handle varied accents, casual speech, interruptions, and hesitations, they work well with the diverse patient populations found across the United States.

The Need for Improvement in Complex Healthcare Interactions

AI voice agents perform well on simple, repetitive calls, but healthcare calls are often sensitive, complex, or emotional. Delivering bad news, explaining medication side effects, or handling a frustrated patient requires emotional awareness and careful wording, which current agents find difficult. Accuracy also drops in noisy environments and on calls with multiple speakers, and many practices serve patients who speak different languages, which adds another challenge.

To address these gaps, researchers have focused on emotional speech synthesis and real-time reasoning. Both aim to make voice agents converse more like humans, improving the patient’s experience during difficult or sensitive calls.

Emotional Speech Synthesis: Human-Like Conversations for Better Patient Engagement

In 2024, Text-to-Speech (TTS) technology advanced considerably through new neural network architectures such as state space models (SSMs) and diffusion-based models. These produce more natural, expressive voices, letting AI voice agents adjust tone, speaking rate, and pitch to convey empathy, concern, or reassurance.

This matters in healthcare because patients want more than facts; they want comfort and understanding. Emotional speech synthesis makes phone bots sound less robotic and more personal, helping patients feel heard. Emotion control also helps in sensitive situations such as reminding patients about chronic care appointments or discussing billing and insurance questions.

According to industry research, platforms now use Speech Synthesis Markup Language (SSML) to control emotional tone precisely, often combined with multimodal outputs to improve communication. As AI voices become better at conveying empathy, patients trust and accept automated phone services more readily in medical offices across the U.S.
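As an illustration of SSML-based tone control, the sketch below wraps a reply in the standard SSML `<speak>`, `<prosody>`, and `<break>` elements. The emotion presets (`EMOTION_PROSODY`) and their rate, pitch, and volume values are assumptions chosen for illustration; TTS vendors differ in which SSML attributes and values they honor, so check your engine’s documentation.

```python
from xml.sax.saxutils import escape

# Hypothetical emotion presets mapped onto standard SSML <prosody> attributes.
# Which values a given TTS engine honors varies by vendor; these are illustrative.
EMOTION_PROSODY = {
    "empathetic": {"rate": "90%", "pitch": "-2st", "volume": "soft"},
    "reassuring": {"rate": "95%", "pitch": "-1st", "volume": "medium"},
    "neutral": {"rate": "medium", "pitch": "medium", "volume": "medium"},
}


def to_ssml(text: str, emotion: str = "neutral", pause_ms: int = 0) -> str:
    """Wrap plain text in an SSML <speak> document with emotion-specific prosody."""
    p = EMOTION_PROSODY[emotion]
    body = escape(text)  # escape &, <, > so caller-derived text cannot break the markup
    pause = f'<break time="{pause_ms}ms"/>' if pause_ms > 0 else ""
    return (
        "<speak>"
        f'{pause}<prosody rate="{p["rate"]}" pitch="{p["pitch"]}" volume="{p["volume"]}">'
        f"{body}</prosody></speak>"
    )


print(to_ssml("I'm sorry you're going through this. Let's find an earlier appointment.",
              emotion="empathetic", pause_ms=300))
```

A short leading pause plus a slightly slower, lower delivery is one simple way to signal empathy before the substance of the reply.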

Real-Time Reasoning Enhances Multi-Step Healthcare Conversations

Many healthcare tasks involve several steps. For example, if a patient wants to reschedule an appointment, the agent must check available slots, update the calendar, notify the provider’s office, and remind the patient about any required preparation.
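The rescheduling example can be sketched as four explicit steps. `Calendar` and `reschedule` are hypothetical, in-memory stand-ins for a practice-management or EHR scheduling API, which a real agent would call over the network instead.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Calendar:
    """Hypothetical in-memory stand-in for a practice-management calendar API."""
    open_slots: list[datetime]
    bookings: dict[str, datetime] = field(default_factory=dict)
    notifications: list[str] = field(default_factory=list)


def reschedule(cal: Calendar, patient_id: str, earliest: datetime, prep_note: str) -> str:
    # Step 1: check availability (earliest open slot at or after the requested time).
    candidates = sorted(s for s in cal.open_slots if s >= earliest)
    if not candidates:
        return "No open slots match; I'll have the office call you back."
    new_slot = candidates[0]
    # Step 2: update the calendar, releasing the patient's old slot if there was one.
    old_slot = cal.bookings.get(patient_id)
    if old_slot is not None:
        cal.open_slots.append(old_slot)
    cal.open_slots.remove(new_slot)
    cal.bookings[patient_id] = new_slot
    # Step 3: notify the provider's office.
    cal.notifications.append(f"{patient_id} moved to {new_slot:%Y-%m-%d %H:%M}")
    # Step 4: remind the patient about any required preparation.
    return f"You're now booked for {new_slot:%Y-%m-%d %H:%M}. Reminder: {prep_note}"


cal = Calendar(
    open_slots=[datetime(2025, 3, 4, 9, 0), datetime(2025, 3, 5, 14, 30)],
    bookings={"pt-001": datetime(2025, 3, 3, 10, 0)},
)
print(reschedule(cal, "pt-001", datetime(2025, 3, 5), "please fast for 8 hours beforehand."))
```

Releasing the old slot before booking the new one keeps the calendar consistent, so another caller can take the freed time.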

Real-time reasoning lets the agent manage multi-turn conversations: it tracks context, retains key details across several exchanges, and chooses actions based on what it learns. In 2024, the three core components (Speech-to-Text, LLMs, and Text-to-Speech) became better integrated, so voice agents can now listen, reason, and reply smoothly.
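One common way to retain details across turns is slot filling: the agent keeps a running dialogue state and decides its next action from what is still missing. The sketch below assumes a hypothetical prescription-refill flow with three required slots; in a real system an LLM would extract the values from each utterance rather than receiving them as a ready-made dictionary.

```python
from dataclasses import dataclass, field

# Hypothetical slots a prescription-refill call must collect before acting.
REQUIRED_SLOTS = ("patient_name", "date_of_birth", "medication")


@dataclass
class DialogueState:
    slots: dict[str, str] = field(default_factory=dict)

    def update(self, extracted: dict[str, str]) -> None:
        """Merge values extracted from the latest turn; empty values never erase earlier answers."""
        self.slots.update({k: v for k, v in extracted.items() if v})

    def next_action(self) -> str:
        """Ask for the first missing slot, or act once everything is known."""
        missing = [s for s in REQUIRED_SLOTS if s not in self.slots]
        return f"ask:{missing[0]}" if missing else "submit_refill_request"


state = DialogueState()
state.update({"patient_name": "Ana Ruiz", "medication": ""})  # turn 1: medication not yet heard
print(state.next_action())  # ask:date_of_birth
state.update({"date_of_birth": "1980-02-14"})                # turn 2
print(state.next_action())  # ask:medication
state.update({"medication": "lisinopril"})                   # turn 3
print(state.next_action())  # submit_refill_request
```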

Newer models such as OpenAI’s GPT-4o and Meta’s Llama 3.2 improved how well voice agents understand context and reason through conversations. They also lowered language-processing costs, making these tools cheaper and more practical for everyday use.

As a result, agents can complete multi-step tasks without human help, shortening calls about insurance verification, prescription refills, or billing questions. Real-time reasoning thus helps medical offices handle high call volumes without overburdening staff or raising costs.

Speech-to-Speech (S2S) Models: A Step Closer to Natural Conversations

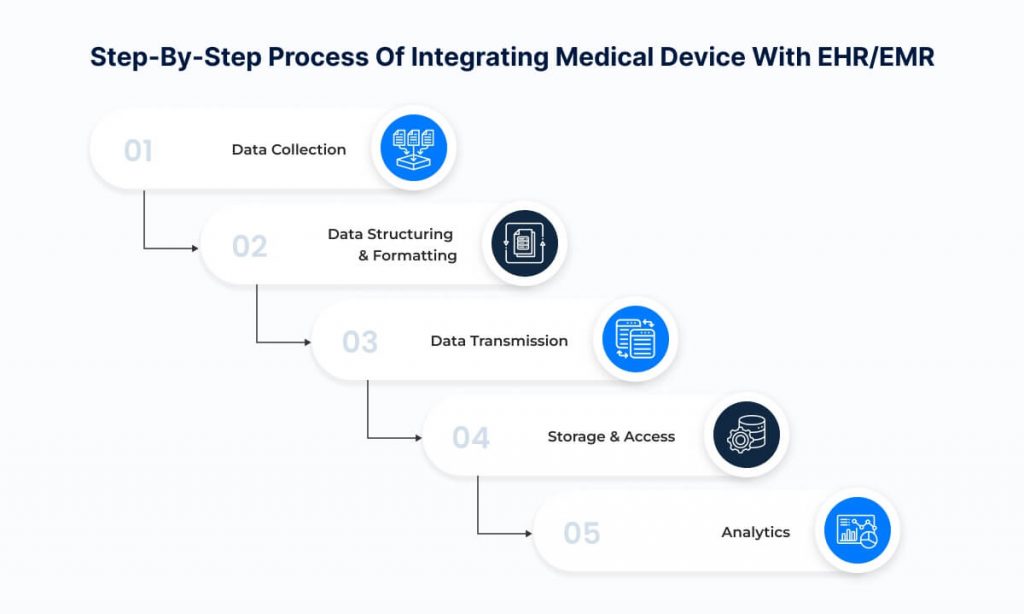

Speech-to-speech (S2S) voice models gained popularity in 2024. Unlike earlier pipelines that convert speech to text and then back to speech, S2S models transform input speech directly into output speech. This cuts response latency from about 510 milliseconds to as low as 160 milliseconds, compared with the roughly 230-millisecond gap typical of human conversation.
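Working through the latency figures cited above: dropping from about 510 ms to 160 ms saves 350 ms, roughly a 69% reduction, while the cascaded figure is more than twice the human gap. The small helper below simply reproduces that arithmetic; `latency_comparison` is an illustrative name, not a standard metric.

```python
def latency_comparison(cascaded_ms: float, s2s_ms: float, human_gap_ms: float) -> dict[str, float]:
    """Compare pipeline response latencies with the typical human turn-taking gap."""
    saved = cascaded_ms - s2s_ms
    return {
        "saved_ms": saved,
        "saved_fraction": saved / cascaded_ms,
        "cascaded_vs_human": cascaded_ms / human_gap_ms,  # how many human gaps a cascaded reply takes
        "s2s_margin_ms": human_gap_ms - s2s_ms,           # positive means faster than a human gap
    }


# Figures cited in this section: ~510 ms cascaded, ~160 ms S2S, ~230 ms human.
r = latency_comparison(510, 160, 230)
print(f"S2S saves {r['saved_ms']} ms ({r['saved_fraction']:.0%}); "
      f"a cascaded reply takes {r['cascaded_vs_human']:.1f}x the human gap.")
```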

S2S models preserve emotional tone, natural speech rhythm, and subtle cues such as interruptions and overlapping speech, closely mimicking how people talk. This is valuable in healthcare, where conversations can be emotional and the agent must listen and respond fluidly.

Systems such as Kyutai’s Moshi use full-duplex designs that let the agent listen and speak at the same time, making conversations smoother and less robotic. For IT managers in medical offices, these speech-to-speech capabilities mean future AI phone systems should handle difficult patient calls better, even when emotions run high.
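Models like Moshi achieve full-duplex speech inside the model itself. The asyncio sketch below illustrates only the simpler orchestration-level behavior that full duplex makes possible, usually called barge-in: the agent keeps listening while it speaks and stops its reply as soon as the caller starts talking. The simulated voice-activity trigger and all function names are hypothetical.

```python
import asyncio


async def speak(chunks: list[str], played: list[str]) -> None:
    """Stream a reply chunk by chunk (sleeps simulate audio playback)."""
    for chunk in chunks:
        played.append(chunk)
        await asyncio.sleep(0.05)


async def caller_starts_talking(after_s: float, barge_in: asyncio.Event) -> None:
    """Simulated voice-activity detector that fires when the caller begins speaking."""
    await asyncio.sleep(after_s)
    barge_in.set()


async def duplex_turn(chunks: list[str], barge_in_after_s: float) -> list[str]:
    """Speak while listening; stop speaking as soon as the caller barges in."""
    played: list[str] = []
    barge_in = asyncio.Event()
    speaker = asyncio.create_task(speak(chunks, played))
    listener = asyncio.create_task(caller_starts_talking(barge_in_after_s, barge_in))
    interrupt = asyncio.create_task(barge_in.wait())
    # Wait for whichever happens first: the reply finishes or the caller interrupts.
    await asyncio.wait({speaker, interrupt}, return_when=asyncio.FIRST_COMPLETED)
    # Cancel whatever is still running (a cut-off reply, an idle listener); done tasks are unaffected.
    for task in (speaker, listener, interrupt):
        task.cancel()
    await asyncio.gather(speaker, listener, interrupt, return_exceptions=True)
    return played


reply = ["Your", "refill", "was", "sent", "to", "the", "pharmacy."]
print(asyncio.run(duplex_turn(reply, barge_in_after_s=10)))    # caller stays silent: full reply
print(asyncio.run(duplex_turn(reply, barge_in_after_s=0.12)))  # caller interrupts: reply cut short
```

Because listening runs concurrently with playback instead of after it, the agent can yield the floor mid-sentence, which is the behavior that makes full-duplex conversations feel less robotic.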

Integration: AI Voice Agents and Workflow Automation in Healthcare

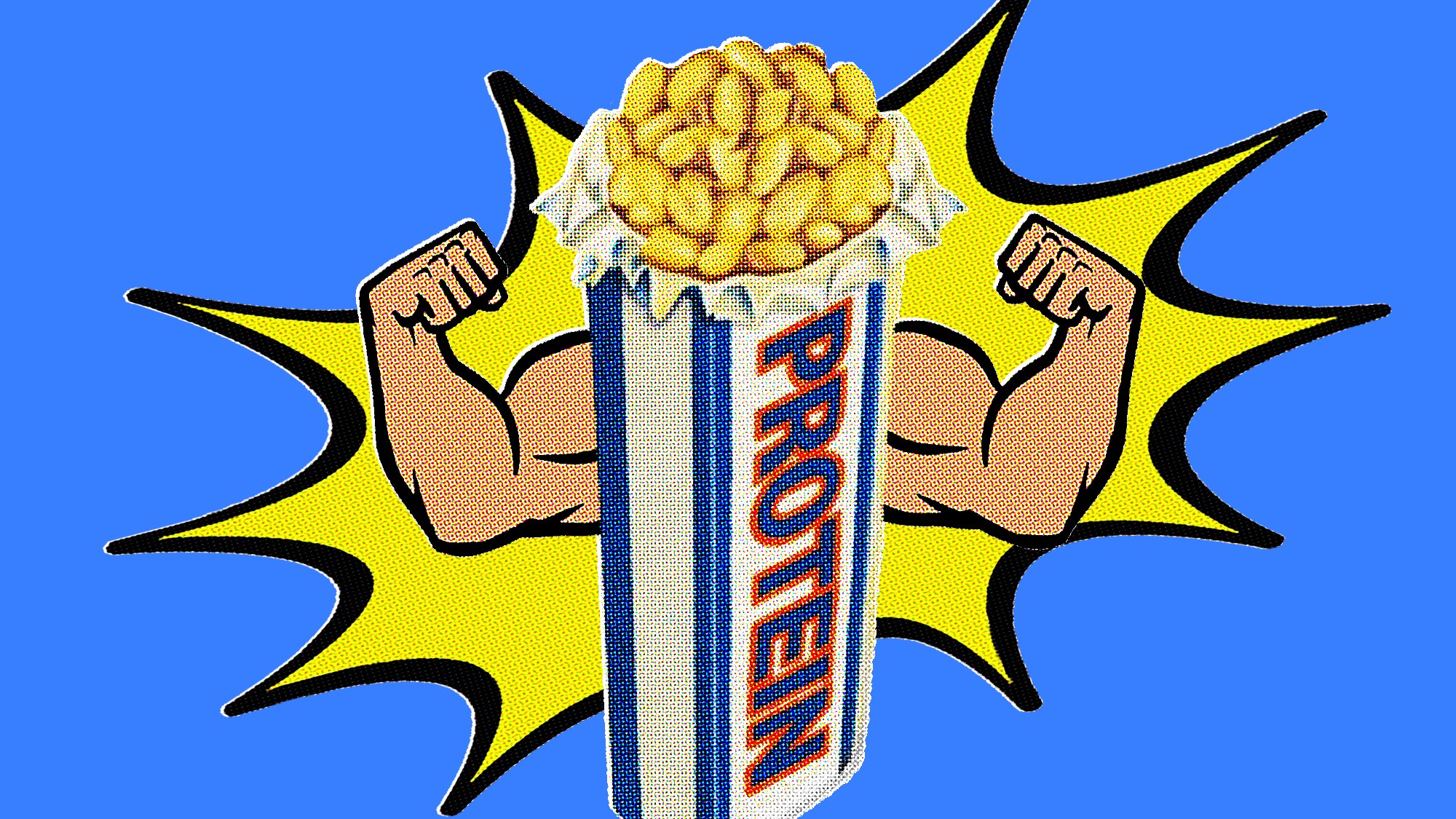

Much of the value of AI voice agents comes from their close integration with healthcare workflow systems. They connect to electronic health records (EHRs), CRM platforms such as Salesforce and HubSpot, appointment calendars, billing and payment systems, and messaging tools.

These connections automate tasks such as real-time appointment booking, follow-up call management, patient record updates, and insurance verification. In 2024, AI voice agents integrated with more than 2,000 applications, taking over many tasks that once consumed significant staff time.

Automating these tasks frees healthcare teams to focus on in-person care and complex decisions. AI voice agents also reduce manual data-entry errors and speed up communication between departments.

Newer AI platforms use retrieval-augmented generation (RAG), which lets voice agents pull information from multiple sources during a call and base their answers on it. This helps with harder tasks such as checking patient eligibility for services or resolving billing problems.
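A toy version of RAG shows the idea: retrieve the snippets most relevant to the caller’s question, then place them in the prompt so the model answers from them. The knowledge-base entries below are invented examples, and simple word-overlap scoring stands in for the embedding-based vector search that production systems typically use.

```python
import re
from collections import Counter

# Hypothetical knowledge-base snippets; a real system would index the practice's
# own policies, fee schedules, and payer rules.
KNOWLEDGE_BASE = [
    "Plan A members pay no copay for annual wellness visits.",
    "Self-pay patients get a 20 percent discount when paying at the visit.",
    "Office hours are Monday to Friday, 8 am to 5 pm.",
]


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by word overlap with the query (a toy stand-in for vector search)."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: sum((q & tokenize(d)).values()), reverse=True)[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Ground the model by placing retrieved context ahead of the caller's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return (
        "Answer only from the context below; if it is not covered, offer a transfer to staff.\n"
        f"Context:\n{context}\n\nCaller: {query}"
    )


print(build_prompt("Do I have a copay for my wellness visit?", KNOWLEDGE_BASE))
```

Instructing the model to answer only from retrieved context, and to hand off when the context is silent, is one common way to limit hallucinated answers on eligibility or billing questions.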

Medical office administrators in the U.S. are adopting pricing models in which they pay for AI voice agents only when tasks are completed successfully. This avoids hidden fees and ties spending to work that actually reduces staff workload.

Challenges and Considerations for Deploying AI Voice Agents in U.S. Healthcare

Despite these advances, challenges remain. AI voice agents struggle in noisy settings, such as busy offices or patients’ homes with background noise, which increases errors. Emotionally sensitive conversations, like delivering bad news or calming anxious patients, still require human involvement.
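Since noisy lines and sensitive topics still call for people, deployments typically route such calls to staff. The rule-based sketch below is one hypothetical way to decide when to hand off; the thresholds, intent labels, and `TurnSignals` fields are assumptions, and real signals would come from the deployment’s own speech recognizer and classifiers.

```python
from dataclasses import dataclass

# Hypothetical thresholds and labels; tune them on your own call data.
MIN_STT_CONFIDENCE = 0.75
SENSITIVE_INTENTS = {"test_results", "bad_news", "complaint"}


@dataclass
class TurnSignals:
    stt_confidence: float    # 0..1 score from the speech recognizer for this turn
    intent: str              # label from the agent's intent classifier
    caller_frustrated: bool  # e.g. from a sentiment model or repeated requests for a person


def should_escalate(t: TurnSignals) -> tuple[bool, str]:
    """Return (hand_off, reason) for the current turn."""
    if t.stt_confidence < MIN_STT_CONFIDENCE:
        return True, "low transcription confidence, possibly a noisy line"
    if t.intent in SENSITIVE_INTENTS:
        return True, "emotionally sensitive topic"
    if t.caller_frustrated:
        return True, "caller frustration detected"
    return False, "continue with AI agent"


print(should_escalate(TurnSignals(stt_confidence=0.92, intent="bad_news", caller_frustrated=False)))
```

Returning a reason along with the decision lets the human who takes over see why the call was transferred.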

Multilingual support is another weak point. Many U.S. communities speak languages such as Spanish, Chinese, and Tagalog, yet today’s voice agents often struggle to switch languages mid-call or to interpret slang and dialects correctly.

Healthcare AI also raises ethical questions. Patient privacy must be protected whenever data passes through APIs, as HIPAA requires. Providers also need to tell callers when they are speaking with an AI rather than a human, so patients are informed and can consent.
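The two safeguards just described can be sketched together: an opening AI disclosure, and a redaction pass that masks obvious identifiers before a transcript is logged or sent to an outside API. The disclosure wording and regular expressions are hypothetical examples covering only a few identifier formats; they fall well short of HIPAA de-identification, whose Safe Harbor method lists 18 identifier types.

```python
import re

# Hypothetical opening script; adapt the wording to your practice and legal guidance.
AI_DISCLOSURE = (
    "Thanks for calling. You're speaking with an AI assistant, not a person. "
    "Say 'representative' at any time to reach our staff."
)

# Illustrative patterns for a few US identifier formats; far from complete PHI coverage.
PHI_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{2,4}\b"),
}


def redact(transcript: str) -> str:
    """Mask matched identifiers before a transcript is logged or sent to an outside API."""
    for label, pattern in PHI_PATTERNS.items():
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript


print(AI_DISCLOSURE)
print(redact("My birthday is 2/14/1980 and my cell is 555-867-5309."))
```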

Medical IT managers should evaluate AI voice agent tools carefully for security, regulatory compliance, and customization. As these systems mature, healthcare organizations should choose vendors that understand the applicable regulations and can keep data secure.

Outlook for AI Voice Agents in U.S. Medical Practices

Experts expect voice AI to become more powerful, customizable, and accessible by 2025. On-device voice AI, which runs without an internet connection, is expected to grow in healthcare because it offers stronger privacy and faster responses. New AI chips will make these tools practical even in small clinics or home-care settings with weak connectivity.

Emotional speech control will improve, allowing AI voices to adjust tone and pace based on how a patient sounds. Speech-to-speech models will handle complex healthcare tasks more smoothly, reducing the need for large human call-center teams.

This progress will help U.S. medical offices handle high call volumes, reduce missed calls, and improve patient satisfaction through more natural, caring conversations. For healthcare leaders and IT teams, adopting AI voice agents could mean lower costs, greater efficiency, and easier access to care for diverse patient populations.

Summary

Advances in emotional speech synthesis, real-time multi-turn reasoning, and tight integration with healthcare systems are making AI voice agents increasingly central to patient communication. As the technology matures, it offers practical ways to improve the quality and speed of complex healthcare calls in medical offices across the United States.

Frequently Asked Questions

What is an AI voice agent and why is it considered a revolution?

An AI voice agent is intelligent software that autonomously manages phone conversations by understanding natural language, formulating responses, and triggering actions through APIs. Unlike traditional IVRs or scripted bots, it transforms call handling by scaling to very high call volumes, operating 24/7, reducing costs, and converting calls into actionable workflows.

What tasks can AI voice agents handle effectively in healthcare?

AI voice agents can efficiently manage appointment scheduling, FAQs about procedures or office hours, patient follow-ups, lead qualification for health plans, and CRM updates. These agents understand natural language, adapt to various accents and speech patterns, and integrate deeply with healthcare systems to automate routine, high-volume calls.

How do AI voice agents benefit healthcare providers in handling overflow calls?

AI voice agents scale phone interactions by handling thousands of calls simultaneously, including after-hours and peak times. This reduces missed calls and wait times, lowers operational costs by automating routine inquiries, and ensures patients receive timely responses, enhancing overall healthcare service quality and access.

What are the current limitations of AI voice agents in sensitive healthcare interactions?

They struggle in noisy environments, can mishandle complex emotional or sensitive conversations like delivering bad news, and may fail to detect frustration or subtle tone changes. AI agents also risk generating inaccurate information (‘hallucinations’) without sufficient domain-specific knowledge, making human oversight essential in delicate healthcare calls.

How do AI voice agents integrate with existing healthcare systems?

AI voice agents connect with healthcare APIs, CRM systems like Salesforce or HubSpot, calendar and scheduling tools, payment processors, and support platforms. This integration enables seamless appointment booking, patient record updates, follow-ups, and multi-channel communication, ensuring workflow automation and personalized patient interactions.

What role does natural language understanding play in healthcare AI voice agents?

Natural language understanding allows AI agents to comprehend various accents, casual speech, interruptions, and paraphrasing. This enables patients to speak naturally without adapting their phrasing, improving patient experience, reducing confusion, and allowing agents to handle real-world, diverse conversation scenarios common in healthcare settings.

Why is multi-language capability important and what challenges do AI voice agents face?

Healthcare providers serve diverse populations requiring multi-language support. Current AI voice agents often underperform in dynamic language switching or when scripts are not designed for multi-language use, leading to degraded conversation quality. Improvements are expected soon, but multi-language fluency remains a challenge today.

What future advancements are expected to enhance AI voice agents in healthcare overflow call handling?

Future advancements include real-time multi-turn reasoning for complex conversations, more natural and emotional speech synthesis, seamless multi-language switching, smarter process handling with context retention, continuous learning from interactions, and speech-to-speech models capturing tone and emotion for more human-like, fluid calls.

How do AI voice agents impact operational costs and scalability in healthcare?

AI voice agents automate high-volume, routine calls at a fraction of the cost of human agents. They operate 24/7 without breaks, handling spikes in call volumes efficiently. This scalability reduces the need for large call centers, lowers overhead, and improves patient access to timely information and services.

What ethical considerations arise from using AI voice agents for healthcare overflow calls?

Ethical issues include ensuring accurate information delivery to avoid patient harm, maintaining patient privacy and data security during API integrations, transparent disclosure that callers are speaking to AI, and recognizing situations needing human intervention to handle emotional, sensitive, or complex healthcare conversations appropriately.

The post Future advancements in AI voice agents including emotional speech synthesis and real-time reasoning to improve quality of complex healthcare interactions first appeared on Simbo AI – Blogs.