You can test the feature described in this article on your own dataset using the CleanMyExcel.io service, which is free and requires no registration.

What is Data Validity?

Data validity refers to data conformity to expected formats, types, and value ranges. This standardisation within a single column ensures the uniformity of data according to implicit or explicit requirements.

Common issues related to data validity include:

- Inappropriate variable types: Column data types that are not suited to analytical needs, e.g., temperature values in text format.

- Columns with mixed data types: A single column containing both numerical and textual data.

- Non-conformity to expected formats: For instance, invalid email addresses or URLs.

- Out-of-range values: Column values that fall outside what is allowed or considered normal, e.g., negative age values or ages greater than 30 for high school students.

- Time zone and DateTime format issues: Inconsistent or heterogeneous date formats within the dataset.

- Lack of measurement standardisation or uniform scale: Variability in the units of measurement used for the same variable, e.g., mixing Celsius and Fahrenheit values for temperature.

- Special characters or whitespace in numeric fields: Numeric data contaminated by non-numeric elements.

And the list goes on.

Error types such as duplicated records or entities and missing values do not fall into this category.

But what is the typical strategy to identifying such data validity issues?

When data meets expectations

Data cleaning, while it can be very complex, can generally be broken down into two key phases:

1. Detecting data errors

2. Correcting these errors.

At its core, data cleaning revolves around identifying and resolving discrepancies in datasets—specifically, values that violate predefined constraints, which are from expectations about the data..

It’s important to acknowledge a fundamental fact: it’s almost impossible, in real-world scenarios, to be exhaustive in identifying all potential data errors—the sources of data issues are virtually infinite, ranging from human input mistakes to system failures—and thus impossible to predict entirely. However, what we can do is define what we consider reasonably regular patterns in our data, known as data expectations—reasonable assumptions about what “correct” data should look like. For example:

- If working with a dataset of high school students, we might expect ages to fall between 14 and 18 years old.

- A customer database might require email addresses to follow a standard format (e.g., user@domain.com).

By establishing these expectations, we create a structured framework for detecting anomalies, making the data cleaning process both manageable and scalable.

These expectations are derived from both semantic and statistical analysis. We understand that the column name “age” refers to the well-known concept of time spent living. Other column names may be drawn from the lexical field of high school, and column statistics (e.g. minimum, maximum, mean, etc.) offer insights into the distribution and range of values. Taken together, this information helps determine our expectations for that column:

- Age values should be integers

- Values should fall between 14 and 18

Expectations tend to be as accurate as the time spent analysing the dataset. Naturally, if a dataset is used regularly by a team daily, the likelihood of discovering subtle data issues — and therefore refining expectations — increases significantly. That said, even simple expectations are rarely checked systematically in most environments, often due to time constraints or simply because it’s not the most enjoyable or high-priority task on the to-do list.

Once we’ve defined our expectations, the next step is to check whether the data actually meets them. This means applying data constraints and looking for violations. For each expectation, one or more constraints can be defined. These Data Quality rules can be translated into programmatic functions that return a binary decision — a Boolean value indicating whether a given value violates the tested constraint.

This strategy is commonly implemented in many data quality management tools, which offer ways to detect all data errors in a dataset based on the defined constraints. An iterative process then begins to address each issue until all expectations are satisfied — i.e. no violations remain.

This strategy may seem straightforward and easy to implement in theory. However, that’s often not what we see in practice — data quality remains a major challenge and a time-consuming task in many organisations.

An LLM-based workflow to generate data expectations, detect violations, and resolve them

This validation workflow is split into two main components: the validation of column data types and the compliance with expectations.

One might handle both simultaneously, but in our experiments, properly converting each column’s values in a data frame beforehand is a crucial preliminary step. It facilitates data cleaning by breaking down the entire process into a series of sequential actions, which improves performance, comprehension, and maintainability. This strategy is, of course, somewhat subjective, but it tends to avoid dealing with all data quality issues at once wherever possible.

To illustrate and understand each step of the whole process, we’ll consider this generated example:

Examples of data validity issues are spread across the table. Each row intentionally embeds one or more issues:

- Row 1: Uses a non‑standard date format and an invalid URL scheme (non‑conformity to expected formats).

- Row 2: Contains a price value as text (“twenty”) instead of a numeric value (inappropriate variable type).

- Row 3: Has a rating given as “4 stars” mixed with numeric ratings elsewhere (mixed data types).

- Row 4: Provides a rating value of “10”, which is out‑of‑range if ratings are expected to be between 1 and 5 (out‑of‑range value). Additionally, there is a typo in the word “Food”.

- Row 5: Uses a price with a currency symbol (“20€”) and a rating with extra whitespace (“5 ”), showing a lack of measurement standardisation and special characters/whitespace issues.

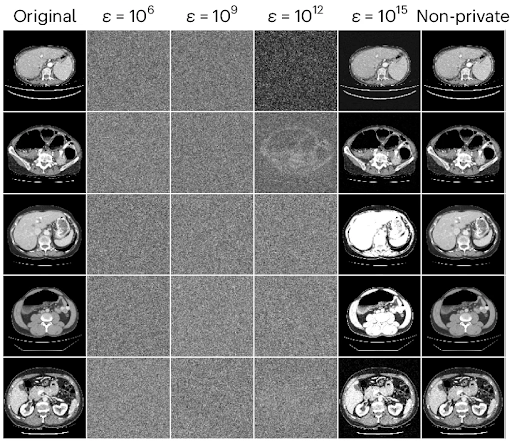

Validate Column Data Types

Estimate column data types

The task here is to determine the most appropriate data type for each column in a data frame, based on the column’s semantic meaning and statistical properties. The classification is limited to the following options: string, int, float, datetime, and boolean. These categories are generic enough to cover most data types commonly encountered.

There are multiple ways to perform this classification, including deterministic approaches. The method chosen here leverages a large language model (Llm), prompted with information about each column and the overall data frame context to guide its decision:

- The list of column names

- Representative rows from the dataset, randomly sampled

- Column statistics describing each column (e.g. number of unique values, proportion of top values, etc.)

Example:

| 1. Column Name: date Description: Represents the date and time information associated with each record. Suggested Data Type: datetime 2. Column Name: category 3. Column Name: price 4. Column Name: image_url 5. Column Name: rating |

Convert Column Values into the Estimated Data Type

Once the data type of each column has been predicted, the conversion of values can begin. Depending on the table framework used, this step might differ slightly, but the underlying logic remains similar. For instance, in the CleanMyExcel.io service, Pandas is used as the core data frame engine. However, other libraries like Polars or PySpark are equally capable within the Python ecosystem.

All non-convertible values are set aside for further investigation.

Analyse Non-convertible Values and Propose Substitutes

This step can be viewed as an imputation task. The previously flagged non-convertible values violate the column’s expected data type. Because the potential causes are so diverse, this step can be quite challenging. Once again, an LLM offers a helpful trade-off to interpret the conversion errors and suggest possible replacements.

Sometimes, the correction is straightforward—for example, converting an age value of twenty into the integer 20. In many other cases, a substitute is not so obvious, and tagging the value with a sentinel (placeholder) value is a better choice. In Pandas, for instance, the special object pd.NA is suitable for such cases.

Example:

| { “violations”: [ { “index”: 2, “column_name”: “rating”, “value”: “4 stars”, “violation”: “Contains non-numeric text in a numeric rating field.”, “substitute”: “4” }, { “index”: 1, “column_name”: “price”, “value”: “twenty”, “violation”: “Textual representation that cannot be directly converted to a number.”, “substitute”: “20” }, { “index”: 4, “column_name”: “price”, “value”: “20€”, “violation”: “Price value contains an extraneous currency symbol.”, “substitute”: “20” } ] } |

Replace Non-convertible Values

At this point, a programmatic function is applied to replace the problematic values with the proposed substitutes. The column is then tested again to ensure all values can now be converted into the estimated data type. If successful, the workflow proceeds to the expectations module. Otherwise, the previous steps are repeated until the column is validated.

Validate Column Data Expectations

Generate Expectations for All Columns

The following elements are provided:

- Data dictionary: column name, a short description, and the expected data type

- Representative rows from the dataset, randomly sampled

- Column statistics, such as number of unique values and proportion of top values

Based on each column’s semantic meaning and statistical properties, the goal is to define validation rules and expectations that ensure data quality and integrity. These expectations should fall into one of the following categories related to standardisation:

- Valid ranges or intervals

- Expected formats (e.g. for emails or phone numbers)

- Allowed values (e.g. for categorical fields)

- Column data standardisation (e.g. ‘Mr’, ‘Mister’, ‘Mrs’, ‘Mrs.’ becomes [‘Mr’, ‘Mrs’])

Example:

| Column name: date

• Expectation: Value must be a valid datetime. ────────────────────────────── • Expectation: Allowed values should be standardized to a predefined set. ────────────────────────────── • Expectation: Value must be a numeric float. ────────────────────────────── • Expectation: Value must be a valid URL with the expected format. ────────────────────────────── • Expectation: Value must be an integer. |

Generate Validation Code

Once expectations have been defined, the goal is to create a structured code that checks the data against these constraints. The code format may vary depending on the chosen validation library, such as Pandera (used in CleanMyExcel.io), Pydantic, Great Expectations, Soda, etc.

To make debugging easier, the validation code should apply checks elementwise so that when a failure occurs, the row index and column name are clearly identified. This helps to pinpoint and resolve issues effectively.

Analyse Violations and Propose Substitutes

When a violation is detected, it must be resolved. Each issue is flagged with a short explanation and a precise location (row index + column name). An LLM is used to estimate the best possible replacement value based on the violation’s description. Again, this proves useful due to the variety and unpredictability of data issues. If the appropriate substitute is unclear, a sentinel value is applied, depending on the data frame package in use.

Example:

| { “violations”: [ { “index”: 3, “column_name”: “category”, “value”: “Fod”, “violation”: “category should be one of [‘Books’, ‘Electronics’, ‘Food’, ‘Clothing’, ‘Furniture’]”, “substitute”: “Food” }, { “index”: 0, “column_name”: “image_url”, “value”: “htp://imageexample.com/pic.jpg”, “violation”: “image_url should start with ‘https://’”, “substitute”: “https://imageexample.com/pic.jpg” }, { “index”: 3, “column_name”: “rating”, “value”: “10”, “violation”: “rating should be between 1 and 5”, “substitute”: “5” } ] } |

The remaining steps are similar to the iteration process used during the validation of column data types. Once all violations are resolved and no further issues are detected, the data frame is fully validated.

You can test the feature described in this article on your own dataset using the CleanMyExcel.io service, which is free and requires no registration.

Conclusion

Expectations may sometimes lack domain expertise — integrating human input can help surface more diverse, specific, and reliable expectations.

A key challenge lies in automation during the resolution process. A human-in-the-loop approach could introduce more transparency, particularly in the selection of substitute or imputed values.

This article is part of a series of articles on automating data cleaning for any tabular dataset:

In upcoming articles, we’ll explore related topics already on the roadmap, including:

- A detailed description of the spreadsheet encoder used in the article above.

- Data uniqueness: preventing duplicate entities within the dataset.

- Data completeness: handling missing values effectively.

- Evaluating data reshaping, validity, and other key aspects of data quality.

Stay tuned!

Thank you to Marc Hobballah for reviewing this article and providing feedback.

All images, unless otherwise noted, are by the author.

The post An LLM-Based Workflow for Automated Tabular Data Validation appeared first on Towards Data Science.