At first, it feels incredible.

You point it at a churn dataset, run a training loop, and it spits out a leaderboard of models with AUC scores that seem too good to be true. You deploy the top-ranked model into production, wire up some APIs, and set it to retrain every week. Business teams are happy. No one had to write a single line of code.

Then something subtle breaks.

Support tickets stop getting prioritized correctly. A fraud model begins ignoring high-risk transactions. Or your churn model flags loyal, active customers for outreach while missing those about to leave. When you look for the root cause, you realize there’s no Git commit, no data schema diff, and no audit trail. Just a black box that used to work and now doesn’t.

This is not a modeling problem. This is a system design problem.

AutoML tools remove friction, but they also remove visibility. In doing so, they expose architectural risks that traditional ML workflows are designed to mitigate: silent drift, untracked data shifts, and failure points hidden behind no-code interfaces. And unlike bugs in a Jupyter notebook, these issues don’t crash. They erode.

This article looks at what happens when AutoML pipelines are used without the safeguards that make machine learning sustainable at scale. Making machine learning easier shouldn’t mean giving up control, especially when the cost of being wrong isn’t just technical but organizational.

The Architecture AutoML Builds: And Why It’s a Problem

AutoML, as it exists today, not only builds models but also creates pipelines: it takes data from ingestion through feature selection to validation, deployment, and even continuous learning. The problem isn’t that these steps are automated; it’s that we no longer see them.

In a traditional ML pipeline, data scientists deliberately decide which data sources to use, how to preprocess them, which transformations to log, and how to version features. These decisions are visible and therefore debuggable.

AutoML systems, particularly those with visual UIs or proprietary DSLs, tend to bury these decisions inside opaque DAGs, making them difficult to audit or reverse-engineer. A change to a data source, a retraining schedule, or a feature encoding can take effect implicitly, without a Git diff, PR review, or CI/CD pipeline.

This creates two systemic problems:

- Subtle changes in behavior: no one notices until the downstream impact adds up.

- No visibility for debugging: when failure occurs, there’s no config diff, no versioned pipeline, and no traceable cause.
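One way to get a config diff back, assuming the platform can export its pipeline settings as a dictionary or JSON, is to fingerprint that export on every run and compare it with the previous one. The sketch below is illustrative only: the config keys and values are hypothetical, and no particular AutoML product is implied.

```python
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Return a stable SHA-256 hash of a pipeline configuration."""
    # sort_keys makes the hash independent of key order in the export
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def diff_config(old: dict, new: dict) -> dict:
    """Return {key: (old_value, new_value)} for each top-level key that changed."""
    keys = set(old) | set(new)
    return {k: (old.get(k), new.get(k)) for k in sorted(keys) if old.get(k) != new.get(k)}


# Hypothetical exports from two consecutive runs
last_run = {"source": "warehouse.transactions", "retrain": "daily", "encoding": "one_hot"}
this_run = {"source": "warehouse.transactions", "retrain": "daily", "encoding": "embedding"}

changed = diff_config(last_run, this_run)
if fingerprint(last_run) != fingerprint(this_run):
    print("Pipeline config changed:", changed)
```

Storing the fingerprint alongside each deployed model gives a cheap, reviewable record of when the pipeline itself changed, even if the platform never produces a Git diff.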

In enterprise contexts, where auditability and traceability are non-negotiable, this isn’t merely a nuisance; it’s a liability.

No-Code Pipelines Break MLOps Principles

Most production ML teams today follow MLOps best practices such as versioning, reproducibility, validation gates, environment separation, and rollback capabilities. AutoML platforms often short-circuit these principles.

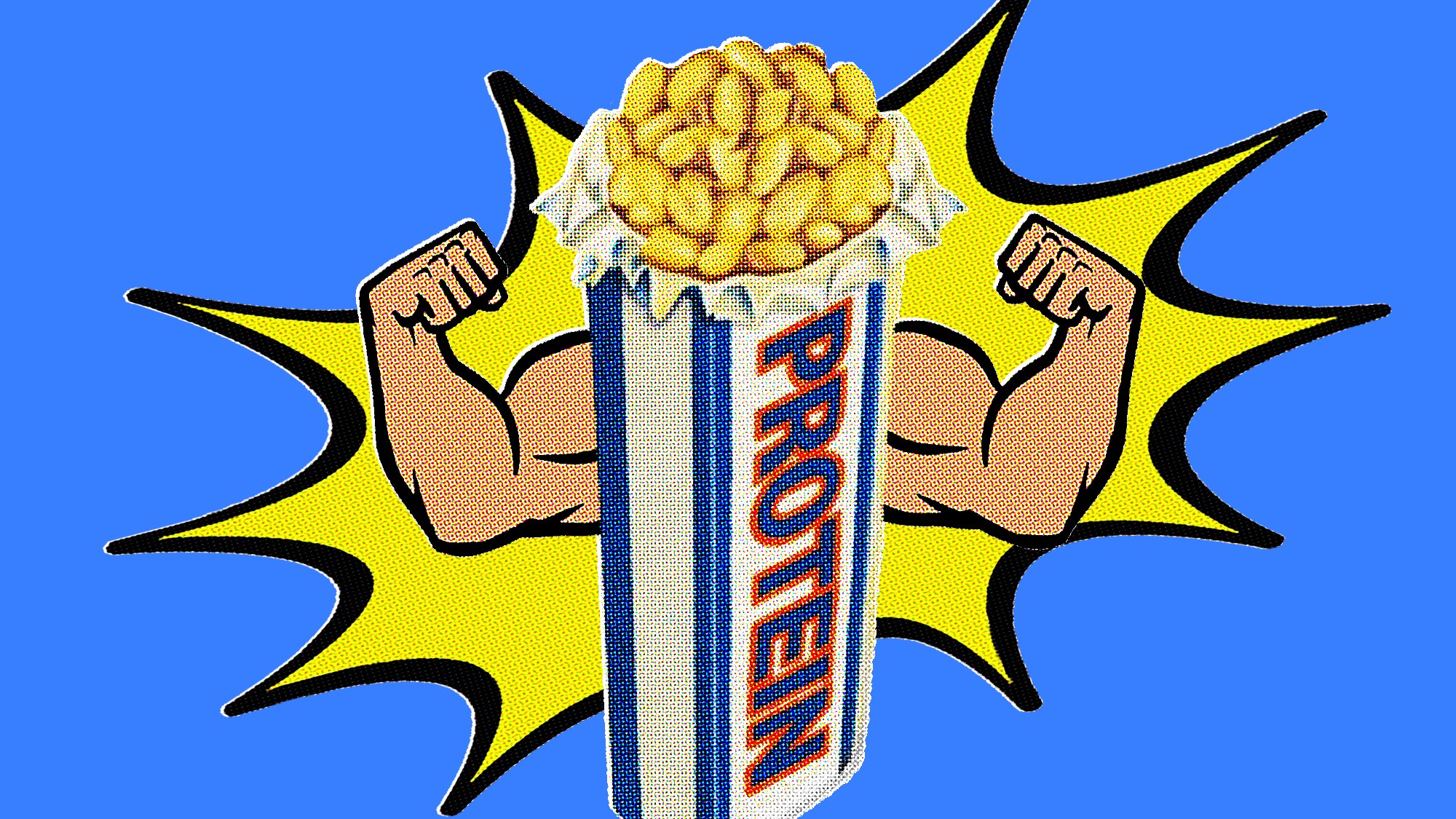

In an enterprise AutoML pilot I reviewed in the financial sector, the team created a fraud detection model using a fully automated retraining pipeline defined through a UI. The retraining frequency was daily. The system ingested data, trained, and deployed automatically, but it did not log the feature schema or metadata between runs.

After three weeks, the schema of the upstream data shifted slightly (two new merchant categories were introduced). The AutoML system silently absorbed the new categories and recomputed its embeddings. The fraud model’s precision dropped by 12%, but no alerts were triggered because accuracy was still within the tolerance band.
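A per-run schema snapshot might have surfaced a shift like this before retraining. The following is a minimal sketch, assuming each batch is available as a list of row dictionaries; the column names and the two new category values are hypothetical stand-ins, not the pilot’s actual data.

```python
def snapshot_schema(rows: list[dict], categorical: set[str]) -> dict:
    """Record each column's type name (from its first occurrence) and,
    for categorical columns, the set of values observed in this batch."""
    schema: dict = {}
    for row in rows:
        for col, value in row.items():
            entry = schema.setdefault(col, {"type": type(value).__name__, "values": set()})
            if col in categorical:
                entry["values"].add(value)
    return schema


def schema_changes(previous: dict, current: dict) -> list[str]:
    """List human-readable differences between two schema snapshots."""
    changes = []
    for col in sorted(set(previous) | set(current)):
        if col not in previous:
            changes.append(f"new column: {col}")
        elif col not in current:
            changes.append(f"missing column: {col}")
        else:
            if previous[col]["type"] != current[col]["type"]:
                changes.append(f"type change in {col}")
            new_values = current[col]["values"] - previous[col]["values"]
            if new_values:
                changes.append(f"new values in {col}: {sorted(new_values)}")
    return changes


# Hypothetical batches from two consecutive daily runs
yesterday = [{"amount": 12.5, "merchant_category": "grocery"},
             {"amount": 80.0, "merchant_category": "fuel"}]
today = yesterday + [{"amount": 300.0, "merchant_category": "crypto_exchange"},
                     {"amount": 45.0, "merchant_category": "gig_platform"}]

prev = snapshot_schema(yesterday, categorical={"merchant_category"})
curr = snapshot_schema(today, categorical={"merchant_category"})
changes = schema_changes(prev, curr)
print(changes)
```

A non-empty result could block the retraining job or page a reviewer, which turns a silent absorption into an explicit decision.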

There was no rollback mechanism because neither the model versions nor the feature versions were explicitly recorded. The team could not re-run the failed version, as the exact training dataset had been overwritten.
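Keeping an immutable, content-addressed copy of each training file is one simple guard against overwritten datasets. The sketch below assumes the training data is a single file small enough to read into memory; the paths and file contents are hypothetical, and a larger dataset would need chunked hashing or a dedicated data versioning tool.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def snapshot_dataset(data_path: Path, store: Path) -> Path:
    """Copy a training file into a content-addressed store and return the copy's path."""
    digest = hashlib.sha256(data_path.read_bytes()).hexdigest()
    target = store / f"{digest}{data_path.suffix}"
    store.mkdir(parents=True, exist_ok=True)
    if not target.exists():  # identical content is stored only once
        shutil.copy2(data_path, target)
    return target


# Demo in a temporary directory (hypothetical data)
workdir = Path(tempfile.mkdtemp())
train = workdir / "train.csv"
store = workdir / "snapshots"

train.write_text("id,amount\n1,10\n")
v1 = snapshot_dataset(train, store)

train.write_text("id,amount\n1,10\n2,99\n")  # the daily job overwrites the file
v2 = snapshot_dataset(train, store)

print(v1.name != v2.name, v1.exists())  # the first version is still recoverable
```

Recording the snapshot name next to the model version is what makes a later re-run or rollback possible.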

This isn’t a modeling error. It’s an infrastructure violation.

When AutoML Encourages Score-Chasing Over Validation

One of AutoML’s more dangerous side effects is that it encourages experimentation at the expense of reasoning. Data handling and metric selection are abstracted away, separating users, especially non-expert users, from what makes the model work.

In one e-commerce case, analysts used AutoML to generate dozens of models for a churn prediction project without manual validation. The platform displayed a leaderboard with AUC scores for each model. The top performer was immediately exported and deployed without manual inspection, feature correlation review, or adversarial testing.

The model worked well in staging, but customer retention campaigns based on its predictions started falling apart. After two weeks, analysis showed that the model relied on a feature derived from a customer satisfaction survey. That feature exists only after a customer has already churned. In short, the model was predicting the past, not the future.
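Leakage of this kind can often be caught with a timing check before any model is trained: a feature is suspect if its source data was recorded after the moment the prediction would have been made. The sketch below assumes each training row carries a prediction timestamp and a timestamp for every feature source; all field and feature names are hypothetical.

```python
from datetime import datetime


def leaky_features(rows: list[dict], prediction_time_key: str,
                   feature_time_keys: dict[str, str]) -> list[str]:
    """Return features whose source was recorded after prediction time in any row."""
    leaky = set()
    for row in rows:
        cutoff = row[prediction_time_key]
        for feature, ts_key in feature_time_keys.items():
            recorded = row.get(ts_key)
            if recorded is not None and recorded > cutoff:
                leaky.add(feature)
    return sorted(leaky)


# Hypothetical training rows: the survey is only completed after churn
rows = [
    {"predicted_at": datetime(2024, 3, 1),
     "last_login_at": datetime(2024, 2, 27),
     "survey_completed_at": datetime(2024, 3, 20)},
    {"predicted_at": datetime(2024, 3, 1),
     "last_login_at": datetime(2024, 2, 10),
     "survey_completed_at": None},
]
feature_sources = {
    "days_since_login": "last_login_at",
    "satisfaction_score": "survey_completed_at",
}

suspects = leaky_features(rows, "predicted_at", feature_sources)
print(suspects)
```

A check like this does not replace causal reasoning, but it gives the workflow a concrete gate between the leaderboard and deployment.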

The model came out of AutoML without context, warnings, or causal checks. Without a validation gate in the workflow, picking the highest score was encouraged over hypothesis testing. These failures are not edge cases. When experimentation becomes disconnected from critical thinking, they are the default.

Monitoring What You Didn’t Build

The final and worst shortcoming of poorly integrated AutoML systems is in observability.

As a rule, custom-built ML pipelines are accompanied by monitoring layers covering input distributions, model latency, response confidence, and feature drift. Many AutoML platforms, however, treat model deployment as the end of the pipeline rather than the start of the lifecycle.
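Input-distribution monitoring does not need access to the model itself. One common approach is the Population Stability Index (PSI), which compares a baseline sample of a feature with recent production values. The sketch below is a plain-Python illustration with equal-width bins and synthetic data; the often-quoted 0.2 alert threshold is a rule of thumb, not a standard.

```python
import math


def psi(expected: list[float], actual: list[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` baseline."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for constant baselines

    def fractions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(idx, bins - 1))] += 1  # clip out-of-range values
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic example: production values shifted well outside the baseline range
baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]

score = psi(baseline, shifted)
if score > 0.2:  # rule-of-thumb threshold, tune per feature
    print(f"Input drift detected, PSI={score:.2f}")
```

Because it runs on inputs alone, a check like this can sit in front of a vendor-hosted model without any hooks into the platform.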

In an industrial sensor analytics application I consulted on, an AutoML-built time series model started misfiring after firmware updates changed sampling intervals. The analytics system had no real-time monitoring hooks on the model.

Because the AutoML vendor containerized the model, the team had no access to logs, weights, or internal diagnostics.
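Even with the model sealed inside a vendor container, the data feeding it can be checked from the outside. The sketch below flags a change in sampling interval by comparing the median gap between readings with the interval the model was trained on; the timestamps, the 10-second interval, and the 10% tolerance are hypothetical.

```python
from statistics import median


def sampling_interval_shift(timestamps: list[float], expected_seconds: float,
                            tolerance: float = 0.1) -> tuple[bool, float]:
    """Return (shifted, observed_median_gap) for a series of reading timestamps."""
    gaps = [later - earlier for earlier, later in zip(timestamps, timestamps[1:])]
    if not gaps:
        raise ValueError("need at least two timestamps")
    observed = median(gaps)
    shifted = abs(observed - expected_seconds) > tolerance * expected_seconds
    return shifted, observed


# Hypothetical readings (seconds) before and after a firmware update
before = [0, 10, 20, 30, 40]
after = [0, 15, 30, 45, 60]

print(sampling_interval_shift(before, expected_seconds=10))
print(sampling_interval_shift(after, expected_seconds=10))
```

Using the median rather than the mean keeps a single dropped reading from triggering the alert.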

As models provide increasingly critical functionality in healthcare, automation, and fraud prevention, we cannot afford opaque model behavior. Transparency must be designed, not assumed.

AutoML’s Strengths: When and Where It Works

However, AutoML is not inherently flawed. When scoped and governed properly, it can be effective.

AutoML speeds up iteration in controlled environments like benchmarking, early prototyping, or internal analytics workflows. Teams can test the feasibility of an idea or compare algorithmic baselines quickly and cheaply, making AutoML a low-risk starting point.

Platforms like MLJAR, H2O Driverless AI, and Ludwig now support integration with CI/CD workflows, custom metrics, and explainability modules. They represent an evolution toward MLOps-aware AutoML, though the results still depend on team discipline, not tooling defaults.

AutoML must be considered a component rather than a solution. The pipeline still needs version control, the data must still be verified, the models must still be monitored, and the workflows must still be designed for long-term reliability.

Conclusion

AutoML tools promise simplicity, and for many workflows, they deliver. But that simplicity often comes at the cost of visibility, reproducibility, and architectural robustness. However fast it is, ML cannot remain a black box if it is to be reliable in production.

The shadow side of AutoML is not that it produces bad models. It is that it creates systems that lack accountability: silently retrained, poorly logged, irreproducible, and unmonitored.

The next generation of ML systems must reconcile speed with control. That means AutoML should be recognized not as a turnkey solution but as a powerful component in human-governed architecture.

The post The Shadow Side of AutoML: When No-Code Tools Hurt More Than Help appeared first on Towards Data Science.